Load balancer:-

1. It exposes the service both inside and outside the cluster.

2. It exposes the service externally using a cloud provider's load balancer. Creating a LoadBalancer service automatically allocates a NodePort and a ClusterIP for that same service.

3. The load balancer forwards traffic to the service's node port on all the nodes.

4. External clients connect to the service through the load balancer IP.

5. This is the preferred approach for exposing a service outside the cluster.
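To make the LoadBalancer, NodePort and ClusterIP layering concrete, here is a toy Python model of one external request. It is an illustration only, not how Kubernetes is implemented: the class, the node names, the ClusterIP and the pod IPs are hypothetical, and real traffic is handled by the cloud load balancer and kube-proxy rules. The only hard facts it relies on are that a LoadBalancer service also gets a ClusterIP and a node port, and that node ports come from the default range 30000-32767.

```python
import random
from dataclasses import dataclass, field

# Default node-port range of the API server (--service-node-port-range).
NODE_PORT_RANGE = range(30000, 32768)


@dataclass
class LoadBalancerService:
    """Toy model: a single Service object that carries all three access layers."""
    name: str
    port: int          # service port; targetPort defaults to the same value
    cluster_ip: str    # allocated automatically
    node_port: int     # allocated automatically, opened on every node
    endpoints: list = field(default_factory=list)  # hypothetical pod IPs

    def __post_init__(self):
        if self.node_port not in NODE_PORT_RANGE:
            raise ValueError(f"node port {self.node_port} is outside 30000-32767")


def route_external_request(svc, nodes, rng=random):
    """Return the hops one external request takes, outermost first."""
    node = rng.choice(nodes)                         # the external LB picks any node
    via_node_port = f"{node}:{svc.node_port}"        # traffic lands on the node port
    via_cluster_ip = f"{svc.cluster_ip}:{svc.port}"  # conceptually the ClusterIP's backend set
    pod = rng.choice(svc.endpoints)                  # kube-proxy picks one backend pod
    return [via_node_port, via_cluster_ip, f"{pod}:{svc.port}"]


if __name__ == "__main__":
    svc = LoadBalancerService("nginx", 80, "10.96.0.10", 31080,
                              ["10.244.1.5", "10.244.2.7"])
    print(route_external_request(svc, ["worker1", "worker2"]))
```

The node and pod are chosen at random here; real load balancers and kube-proxy use their own selection policies.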

Types of load balancers used in Kubernetes :-

1. AWS load balancer.

2. MetalLB, MicroK8s and Traefik.

3. HAProxy.

4. NGINX reverse proxy LB.

i) Configuring an AWS load balancer for k8s.

1. Set up the ELB.

2. Configure the health check.

3. Verify that all nodes are InService.

4. Check the LB URL :-
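The health check is what decides whether a node shows InService. A minimal sketch of the consecutive-threshold logic, assuming a simplified model: the threshold values and the starting state below are illustrative assumptions, not AWS defaults, and the class is hypothetical rather than any AWS API.

```python
from dataclasses import dataclass


@dataclass
class HealthCheckedNode:
    """Simplified model of how a load balancer health check flips a node's state."""
    healthy_threshold: int = 3    # assumed: consecutive passes before InService
    unhealthy_threshold: int = 2  # assumed: consecutive failures before OutOfService
    state: str = "OutOfService"   # assumed starting state for a newly registered node
    passes: int = 0
    failures: int = 0

    def record(self, check_passed: bool) -> str:
        """Feed one health-check result and return the resulting state."""
        if check_passed:
            self.passes += 1
            self.failures = 0
            if self.passes >= self.healthy_threshold:
                self.state = "InService"
        else:
            self.failures += 1
            self.passes = 0
            if self.failures >= self.unhealthy_threshold:
                self.state = "OutOfService"
        return self.state


if __name__ == "__main__":
    node = HealthCheckedNode()
    for result in [True, True, True, False, False]:
        print(node.record(result))
```

With these thresholds the node turns InService on the third passing check and stays there after a single failure; only the second consecutive failure takes it out of service. A node that never passes its check (for example, nothing listening on the node port) never reaches InService.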

ii) Configuring MetalLB for k8s.

1. Layer 2 mode is the simplest to configure: in many cases, you don't need any protocol-specific configuration, only IP addresses.

2. Layer 2 mode does not require the IPs to be bound to the network interfaces of your worker nodes. It works by responding to ARP requests on your local network directly, giving the machine's MAC address to clients.
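To show what "responding to ARP requests" means on the wire, here is a Python sketch that builds (but does not send) the Ethernet frame a node would use to answer "who has the service IP?" with its own MAC address. This is only an illustration of the standard ARP reply layout; MetalLB's speaker is written in Go and does not use this code, and all MAC and IP addresses in the example are hypothetical.

```python
import ipaddress
import struct


def mac_to_bytes(mac: str) -> bytes:
    """'02:42:ac:1f:18:0a' -> 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def build_arp_reply(node_mac: str, service_ip: str,
                    client_mac: str, client_ip: str) -> bytes:
    """Ethernet + ARP reply saying 'service_ip is at node_mac'."""
    eth_header = struct.pack(
        "!6s6sH",
        mac_to_bytes(client_mac),  # destination: the client that asked
        mac_to_bytes(node_mac),    # source: this node's NIC
        0x0806,                    # EtherType: ARP
    )
    arp_body = struct.pack(
        "!HHBBH6s4s6s4s",
        1,                                          # hardware type: Ethernet
        0x0800,                                     # protocol type: IPv4
        6, 4,                                       # MAC length, IPv4 length
        2,                                          # opcode 2 = reply
        mac_to_bytes(node_mac),                     # sender MAC: the node
        ipaddress.IPv4Address(service_ip).packed,   # sender IP: the service IP
        mac_to_bytes(client_mac),                   # target MAC: the client
        ipaddress.IPv4Address(client_ip).packed,    # target IP: the client
    )
    return eth_header + arp_body  # 14 + 28 = 42 bytes (NIC pads to the minimum frame size)


if __name__ == "__main__":
    frame = build_arp_reply("02:42:ac:1f:18:0a", "172.31.24.220",
                            "02:42:ac:1f:18:01", "172.31.24.5")
    print(frame.hex())
```

The key point is the sender fields: the IP is the service IP, but the MAC is the node's own, which is why the IP never has to be configured on the node's interface.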

1. Controller pod :- assigns IP addresses to LoadBalancer services.

2. Speaker pods :- a speaker pod runs on every node (as a DaemonSet) and maps the service IP to the node's MAC address by answering ARP requests for it.
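Even though a speaker runs on every node, in Layer 2 mode only one node should answer ARP for a given service IP at a time. A simplified sketch of how speakers can agree on that node without coordinating: each speaker ranks the ready nodes by a hash of node name plus service IP, and the top-ranked node announces. This is an approximation labeled as such; MetalLB's exact hashing and its memberlist-based node discovery differ in detail, and the node names are hypothetical.

```python
import hashlib


def pick_announcing_node(service_ip: str, ready_nodes: list) -> str:
    """Every speaker runs this with the same inputs, so all agree on one owner."""
    def rank(node: str) -> bytes:
        # Deterministic per (node, service IP) pair; independent of list order.
        return hashlib.sha256(f"{node}#{service_ip}".encode()).digest()
    return sorted(ready_nodes, key=rank)[0]


if __name__ == "__main__":
    nodes = ["worker1", "worker2", "worker3"]
    owner = pick_announcing_node("172.31.24.220", nodes)
    print("announcing node:", owner)
    # If that node fails, the remaining speakers recompute and one takes over.
    survivors = [n for n in nodes if n != owner]
    print("after failover:", pick_announcing_node("172.31.24.220", survivors))
```

Because the ranking depends on the service IP, different services tend to land on different nodes, which spreads the announcing role across the cluster even though each single IP is served by one node.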

If you're using kube-proxy in IPVS mode, since Kubernetes v1.14.2 you have to enable strict ARP mode. Note that you don't need this if you're using kube-router as the service proxy, because it enables strict ARP by default.

# kubectl edit configmap -n kube-system kube-proxy

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  strictARP: true
```
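As a non-interactive alternative to `kubectl edit`, the change above is a plain text substitution on the kube-proxy config. A minimal Python sketch of that substitution, assuming the config text has already been fetched (for example with `kubectl get configmap kube-proxy -n kube-system -o yaml`); the function name is hypothetical and it only edits a string, it does not talk to the cluster.

```python
import re

# Matches "strictARP: true" or "strictARP: false", keeping the key and spacing.
STRICT_ARP = re.compile(r"(\bstrictARP:\s*)(true|false)\b")


def enable_strict_arp(config_text: str) -> str:
    """Return config_text with strictARP set to true (idempotent)."""
    if not STRICT_ARP.search(config_text):
        raise ValueError("no strictARP key found; add it under the ipvs: section")
    return STRICT_ARP.sub(r"\1true", config_text)


if __name__ == "__main__":
    cfg = 'mode: "ipvs"\nipvs:\n  strictARP: false\n'
    print(enable_strict_arp(cfg))
```

The result can then be applied back with `kubectl apply -f -`; kube-proxy pods may need a restart to pick up the new setting.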

# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/namespace.yaml

# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/metallb.yaml

# kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"
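The `memberlist` secret holds a random key that, as we understand it, the speakers use to encrypt the memberlist traffic they exchange with each other. A Python sketch of what `openssl rand -base64 128` produces: 128 random bytes, base64-encoded. The function name is hypothetical; note that `openssl` wraps its output at 64 characters while this version returns one line.

```python
import base64
import secrets


def memberlist_secret_key(num_bytes: int = 128) -> str:
    """Random key equivalent in content to `openssl rand -base64 128`."""
    raw = secrets.token_bytes(num_bytes)  # cryptographically secure random bytes
    return base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    key = memberlist_secret_key()
    print(len(key), key[:16] + "...")
```

128 bytes encode to 172 base64 characters (42 full 3-byte groups plus one padded group).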

# vim metallb-configmap.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.31.24.220-172.31.24.250   # external IP pool (a private RFC 1918 range, not public IPs)
```

Note :- Since my Kubernetes cluster uses 172.31.24.0/24 as the internal CIDR for Calico networking, I reserved a range of IPs from it for the load balancers.
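Before applying the ConfigMap, it can help to sanity-check the address range. A small Python sketch using the standard `ipaddress` module: it confirms the range is well-ordered, lies inside the cluster's network, and counts how many LoadBalancer IPs it provides. The function name is hypothetical; the addresses are the ones from the ConfigMap and note above.

```python
import ipaddress


def check_pool(start: str, end: str, cidr: str) -> int:
    """Validate a MetalLB 'start-end' range and return how many IPs it holds."""
    first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
    network = ipaddress.ip_network(cidr)
    if first > last:
        raise ValueError("range start is after range end")
    if first not in network or last not in network:
        raise ValueError(f"{start}-{end} is not inside {cidr}")
    return int(last) - int(first) + 1


if __name__ == "__main__":
    print(check_pool("172.31.24.220", "172.31.24.250", "172.31.24.0/24"))
    print(ipaddress.ip_address("172.31.24.220").is_private)
```

This prints `31` and `True`: the pool holds 31 addresses, and they belong to the private 172.16.0.0/12 block, so clients must be on (or routed into) that network to reach them.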

# kubectl get svc

# kubectl apply -f metallb-configmap.yaml

# kubectl describe configmap -n metallb-system

# kubectl get all -n metallb-system

1. Controller pod :- provides IPs to the services.

2. Speaker pods :- one speaker pod runs on every node.

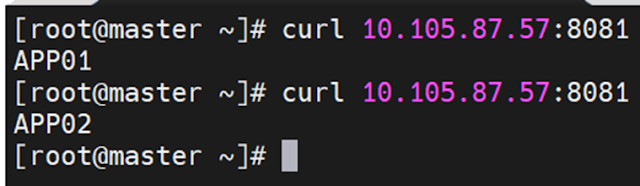

Create a LoadBalancer service.

# kubectl expose deploy nginx-deploy --port 80 --type LoadBalancer

OR

# vim nginx-service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: LoadBalancer
  selector:
    env: dev
  ports:
  - port: 80
    name: http
```

# kubectl apply -f nginx-service.yaml

# kubectl get svc
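`kubectl apply -f` accepts JSON as well as YAML, so the nginx LoadBalancer manifest can also be generated from code, which is handy when creating many similar services. A minimal Python sketch, assuming the same fields as `nginx-service.yaml`; the function name is hypothetical, and `targetPort` is omitted because it defaults to `port`.

```python
import json


def load_balancer_service(name: str, selector: dict, port: int,
                          port_name: str = "http") -> dict:
    """Build a Service manifest of type LoadBalancer as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,  # must match the pod labels
            "ports": [{"port": port, "name": port_name}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(load_balancer_service("nginx", {"env": "dev"}, 80), indent=2))
```

Redirecting the output to a file (hypothetically `nginx-service.json`) and running `kubectl apply -f nginx-service.json` should create the same service as the YAML version.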

For the describe command:

# kubectl describe pod/controller-58f55bbb6c-scrbw -n metallb-system

For the logs command:

# kubectl logs pod/controller-58f55bbb6c-scrbw -n metallb-system

# kubectl describe service <service_name>

The speaker-jzjcm pod is running on worker2:

# kubectl logs speaker-jzjcm -n metallb-system

This IP will be mapped to the MAC address of worker2.

# ifconfig

MetalLB in BGP mode :-

There is no concept of ARP in BGP mode. Instead, the switch (router) must peer with every node: the speaker on each node and the switch communicate using the BGP protocol, and the speakers advertise the service IPs as routes.
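Because every node advertises the same service IP, the router ends up with several equal-cost paths to it, and with equal-cost multipath (ECMP) it spreads connections across the nodes. A toy Python model of that router behaviour: the node IPs, the /32 prefix and the SHA-256 flow hash are assumptions for illustration, since real routers use their own hardware hashing and this is not a BGP implementation.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Router:
    """Toy BGP router: keeps every next hop advertised for a prefix (ECMP)."""
    routes: dict = field(default_factory=dict)  # prefix -> set of node IPs

    def advertise(self, prefix: str, node_ip: str) -> None:
        """A node's speaker announces 'send traffic for prefix to me'."""
        self.routes.setdefault(prefix, set()).add(node_ip)

    def withdraw(self, prefix: str, node_ip: str) -> None:
        """A node goes away (or its BGP session drops): remove that path."""
        self.routes.get(prefix, set()).discard(node_ip)

    def next_hop(self, prefix: str, flow: tuple) -> str:
        """Hash the flow so every packet of one connection hits the same node."""
        hops = sorted(self.routes.get(prefix, ()))
        if not hops:
            raise LookupError(f"no route to {prefix}")
        digest = hashlib.sha256(repr(flow).encode()).digest()
        return hops[int.from_bytes(digest[:4], "big") % len(hops)]


if __name__ == "__main__":
    router = Router()
    for node_ip in ["172.31.24.11", "172.31.24.12", "172.31.24.13"]:
        router.advertise("172.31.24.220/32", node_ip)
    flow = ("10.0.0.5", 51515, "172.31.24.220", 80, "tcp")  # src, sport, dst, dport, proto
    print(router.next_hop("172.31.24.220/32", flow))
```

Unlike Layer 2 mode, where one node receives all traffic for an IP, here every advertising node carries a share of the connections; when a node withdraws its route, only the flows that hashed to it move elsewhere (or, with this simple modulo hash, some others as well).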