Two servers

1. Server – install Prometheus, Grafana, Alertmanager and Pushgateway.

2. Worker node – install node_exporter, nginx_exporter, nginxlog exporter and blackbox exporter.

Data flow :-

exporters (worker node) --> Prometheus, queried with PromQL (server) --> Grafana (server)

Prometheus :-

Prometheus is an open-source monitoring tool, written in Go, that records real-time metrics in a time-series database. It collects metrics by pulling them over HTTP from the monitored targets (scraping), which keeps the targets simple and helps performance and scalability.

Other tools that make Prometheus a complete monitoring stack:

Exporters :- agents that collect metrics from third-party systems and expose them in the Prometheus format.

1. Node Exporter :- the official exporter that collects hardware and OS information from Linux nodes, such as CPU, disk and memory statistics.

Pushgateway :- lets short-lived or batch jobs push custom metrics to it; Prometheus is then configured to scrape those metrics from the Pushgateway.

Alertmanager :- used to raise alarms based on certain metric conditions. Prometheus evaluates alerting rules against its targets; when a rule's condition is met, the alert is sent to Alertmanager, which routes it to destinations such as Slack or email.

Blackbox exporter :- used to monitor websites and other endpoints with Prometheus. It probes endpoints over HTTP, HTTPS, ICMP, TCP and DNS.

Metrics :-

i) Targets (Linux, Windows, applications) --> CPU status, memory/disk usage, request count --> each such measurement is called a metric, and metrics are stored in the Prometheus database.

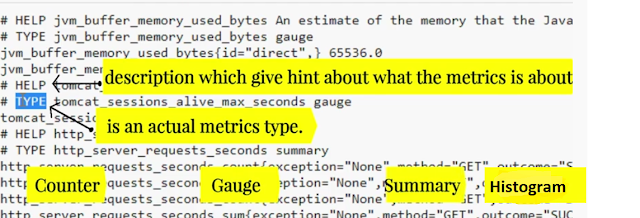

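ii) Metrics format :- human-readable and text-based. Each metric comes with:

HELP :- a description of what the metric measures.

TYPE :- one of 4 metric types:

1) counter :- how many times X happened. The value only ever increases, it never decreases (it restarts from zero when the process restarts). Examples:

i) number of requests served.

ii) tasks completed, or errors.

2) gauge :- what is the current value of X now? The value can go up and down, e.g. current CPU load or current free disk space.

3) summary :- how long something took, or how big something was. Next to its quantiles it exposes:

i) count: the number of times the event was observed.

ii) sum: the sum of all observed values, e.g. the total time taken by those events.

4) histogram :- also answers how long or how big, but counts each observation into configurable buckets (cumulative counters labelled le="..."), plus the same count and sum, so quantiles can be calculated later with PromQL.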
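To make these types concrete, here is a minimal sketch that prints samples in the text format an exporter serves on /metrics. The demo_* metric names and values are hypothetical; a summary would look similar to the histogram, but with {quantile="..."} samples in place of the buckets.

```shell
#!/bin/sh
# Sketch: print samples in the Prometheus text exposition format.
# Metric names (demo_*) and values are hypothetical, for illustration only.

# print_metric NAME TYPE HELP VALUE -> "# HELP", "# TYPE" and one sample line
print_metric() {
  printf '# HELP %s %s\n' "$1" "$3"
  printf '# TYPE %s %s\n' "$1" "$2"
  printf '%s %s\n' "$1" "$4"
}

# print_histogram NAME "UPPER BOUNDS" OBSERVATION...
# Each bucket counts the observations <= its upper bound (so buckets are
# cumulative), then come the +Inf bucket, the _sum and the _count.
print_histogram() {
  _name=$1
  _bounds=$2
  shift 2
  printf '# TYPE %s histogram\n' "$_name"
  awk -v name="$_name" -v bounds="$_bounds" 'BEGIN {
    nb = split(bounds, b, " ")
    n = ARGC - 1
    for (i = 1; i <= n; i++) sum += ARGV[i]
    for (j = 1; j <= nb; j++) {
      c = 0
      for (i = 1; i <= n; i++) if (ARGV[i] + 0 <= b[j] + 0) c++
      printf "%s_bucket{le=\"%s\"} %d\n", name, b[j], c
    }
    printf "%s_bucket{le=\"+Inf\"} %d\n", name, n
    printf "%s_sum %g\n", name, sum
    printf "%s_count %d\n", name, n
  }' "$@"
}

print_metric demo_requests_total counter "Number of requests served." 1027
print_metric demo_disk_free_bytes gauge "Free disk space right now." 52428800
print_histogram demo_request_duration_seconds "0.1 0.5 1" 0.05 0.3 0.3 2
```

With observations 0.05, 0.3, 0.3 and 2, the le="0.5" bucket holds 3 observations and only the +Inf bucket includes the 2-second one, which is why buckets only ever grow from left to right.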

PromQL :- the Prometheus query language, used to select, filter and aggregate multi-dimensional time-series data.
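As a sketch of how a PromQL expression reaches Prometheus outside the web UI, the helper below builds an instant-query URL for Prometheus's HTTP API (/api/v1/query). It assumes a server at http://localhost:9090; urlencode and promql_url are hypothetical helper names, and the encoder assumes ASCII input.

```shell
#!/bin/sh
# Sketch: build a Prometheus HTTP API instant-query URL for a PromQL expression.
# Assumes the standard /api/v1/query endpoint; helper names are hypothetical.

# urlencode STRING -> percent-encodes every byte except A-Z a-z 0-9 . _ ~ -
# The string is passed through the environment (Q) so awk does not
# interpret backslashes in PromQL regexes.
urlencode() {
  Q=$1 LC_ALL=C awk 'BEGIN {
    for (i = 1; i < 256; i++) ord[sprintf("%c", i)] = i
    s = ENVIRON["Q"]
    out = ""
    for (i = 1; i <= length(s); i++) {
      c = substr(s, i, 1)
      if (c ~ /[A-Za-z0-9._~-]/) out = out c
      else out = out sprintf("%%%02X", ord[c])
    }
    print out
  }'
}

# promql_url BASE_URL EXPR -> instant-query URL for EXPR
promql_url() {
  printf '%s/api/v1/query?query=%s\n' "$1" "$(urlencode "$2")"
}
```

For example, `curl -s "$(promql_url http://localhost:9090 'up == 0')"` should return JSON listing the targets that are currently down; `curl -G --data-urlencode 'query=up == 0' http://localhost:9090/api/v1/query` performs the same encoding for you.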

Grafana :- a tool commonly used to visualize the data scraped by Prometheus, for monitoring and analysis. It is used to build dashboards whose panels show specific metrics over a chosen time range.

1. Create a Prometheus system group and user

sudo groupadd --system prometheus

sudo useradd -s /sbin/nologin --system -g prometheus prometheus

2. Create the data directory Prometheus needs, plus the configuration directories

sudo mkdir /var/lib/prometheus

for i in rules rules.d files_sd; do sudo mkdir -p /etc/prometheus/${i}; done

sudo apt update

sudo apt -y install wget curl vim

3.Download Prometheus

mkdir -p /tmp/prometheus && cd /tmp/prometheus

wget https://github.com/prometheus/prometheus/releases/download/v2.23.0/prometheus-2.23.0.linux-amd64.tar.gz
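Before extracting, it is worth checking the tarball's integrity. A minimal sketch, assuming the release page also publishes a sha256sums.txt file in the usual sha256sum format and that it has been downloaded next to the tarball; verify_release is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: verify a downloaded release tarball against a sha256sums.txt file.
# Assumes "HASH  FILENAME" lines (sha256sum format) and that it runs in the
# directory that holds both files. verify_release is a hypothetical name.

# verify_release FILE SUMS_FILE -> exit status 0 only if the hash matches
verify_release() {
  line=$(awk -v f="$1" '$2 == f' "$2")
  if [ -z "$line" ]; then
    echo "no checksum listed for $1" >&2
    return 1
  fi
  printf '%s\n' "$line" | sha256sum -c --status -
}
```

For example, `verify_release prometheus-2.23.0.linux-amd64.tar.gz sha256sums.txt && echo OK`. The same check applies to the other release tarballs downloaded in this guide.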

tar xvf prometheus*.tar.gz

cd prometheus*/

sudo mv prometheus promtool /usr/local/bin/

prometheus --version

promtool --version

sudo mv prometheus.yml /etc/prometheus/prometheus.yml

sudo mv consoles/ console_libraries/ /etc/prometheus/

4. Configure Prometheus

sudo vim /etc/prometheus/prometheus.yml

scrape_configs:
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']

How to verify the Prometheus configuration file :-

promtool check config /etc/prometheus/prometheus.yml

5. Create a Prometheus systemd service unit file

sudo vim /etc/systemd/system/prometheus.service

[Unit]
Description=Prometheus
Documentation=https://prometheus.io/docs/introduction/overview/
Wants=network-online.target
After=network-online.target

[Service]
Type=simple
User=prometheus
Group=prometheus
ExecReload=/bin/kill -HUP $MAINPID
# For security, use the server's private IP instead of 0.0.0.0 in --web.listen-address
ExecStart=/usr/local/bin/prometheus \
  --config.file=/etc/prometheus/prometheus.yml \
  --storage.tsdb.path=/var/lib/prometheus \
  --web.console.templates=/etc/prometheus/consoles \
  --web.console.libraries=/etc/prometheus/console_libraries \
  --web.listen-address=0.0.0.0:9090
SyslogIdentifier=prometheus
Restart=always

[Install]
WantedBy=multi-user.target

OR (a variant that runs as root and enables the admin API and the lifecycle endpoints, such as /-/reload)

[Unit]
Description=Prometheus
Wants=network-online.target
After=network-online.target

[Service]
Type=simple
User=root
Group=root
ExecStart=/usr/local/bin/prometheus \
  --config.file=/etc/prometheus/prometheus.yml \
  --storage.tsdb.path=/var/lib/prometheus \
  --web.console.templates=/etc/prometheus/consoles \
  --web.console.libraries=/etc/prometheus/console_libraries \
  --web.enable-admin-api \
  --web.enable-lifecycle
SyslogIdentifier=prometheus
Restart=always

[Install]
WantedBy=multi-user.target

6. Change directory ownership and permissions

for i in rules rules.d files_sd; do sudo chown -R prometheus:prometheus /etc/prometheus/${i}; done

for i in rules rules.d files_sd; do sudo chmod -R 775 /etc/prometheus/${i}; done

sudo chown -R prometheus:prometheus /var/lib/prometheus/

7. Reload the systemd daemon and start the service:

sudo systemctl daemon-reload

sudo systemctl start prometheus

sudo systemctl enable prometheus

sudo systemctl status prometheus

OR (put Prometheus behind nginx with basic authentication; htpasswd comes from the apache2-utils package)

#htpasswd -c /etc/nginx/.htpasswd admin

#vim /etc/nginx/sites-enabled/prometheus.conf

server {
    listen 80 default_server;

    location / {
        auth_basic "Prometheus Auth";
        auth_basic_user_file /etc/nginx/.htpasswd;
        proxy_pass http://localhost:9090;
    }
}
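With basic auth enabled, every request to this nginx location must carry an Authorization header. The sketch below shows what that header contains, which is what Grafana's "Basic auth" option sends; basic_auth_header is a hypothetical helper and admin/admin are example credentials.

```shell
#!/bin/sh
# Sketch: the HTTP Basic auth header expected by nginx's auth_basic.
# basic_auth_header is a hypothetical helper; credentials are examples.

# basic_auth_header USER PASSWORD -> "Authorization: Basic <base64(user:password)>"
basic_auth_header() {
  # tr removes base64's line wrapping so long credentials stay on one line
  printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}
```

In practice `curl -u admin:PASSWORD http://13.127.100.171/api/v1/targets` builds the same header; without it nginx answers 401. Base64 is only an encoding, so this setup is best used over HTTPS or a private network.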

Open http://13.127.100.171/ and log in with the htpasswd username and password.

Grafana side :-

1. Add the data source URL.

2. Enable Basic auth.

3. Add the username and password.

http://13.127.100.171:9090/

Note :- to reload the Prometheus configuration from the command line without restarting the service (this needs the --web.enable-lifecycle flag):

#curl -X POST http://localhost:9090/-/reload

Install Grafana on Ubuntu 20.04

wget -q -O - https://packages.grafana.com/gpg.key | sudo apt-key add -

echo "deb https://packages.grafana.com/oss/deb stable main" | sudo tee -a /etc/apt/sources.list.d/grafana.list

sudo apt-get update

sudo apt-get install grafana

sudo systemctl start grafana-server

sudo systemctl enable grafana-server

sudo systemctl status grafana-server

Default logins are:

Username: admin

Password: admin

Grafana package details:

Installs the binary to /usr/sbin/grafana-server

Installs the init.d script to /etc/init.d/grafana-server

Creates a default file (environment vars) at /etc/default/grafana-server

Installs the configuration file to /etc/grafana/grafana.ini

Installs the systemd service (if systemd is available) named grafana-server.service

The default configuration sets the log file at /var/log/grafana/grafana.log

The default configuration specifies a sqlite3 database at /var/lib/grafana/grafana.db

Installs HTML/JS/CSS and other Grafana files at /usr/share/grafana

Install a plugin with the CLI (restart grafana-server afterwards):

# grafana-cli plugins install grafana-image-renderer

Open http://13.127.100.171:3000/login

Go to "Data sources" --> "Add data source" --> select Prometheus.

Add the Prometheus URL http://13.127.100.171:9090
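The data source can also be created without the UI through Grafana's HTTP API (POST /api/datasources). A minimal sketch of the request body, assuming the admin credentials and addresses used in this guide; grafana_ds_payload is a hypothetical helper and does no JSON escaping, so the values must not contain quotes.

```shell
#!/bin/sh
# Sketch: JSON body for creating a Prometheus data source via Grafana's
# HTTP API. grafana_ds_payload is hypothetical; values are examples and
# are inserted without JSON escaping.

# grafana_ds_payload NAME PROMETHEUS_URL -> one-line JSON document
grafana_ds_payload() {
  printf '{"name":"%s","type":"prometheus","url":"%s","access":"proxy"}\n' "$1" "$2"
}
```

Usage: `curl -u admin:admin -H 'Content-Type: application/json' -X POST http://13.127.100.171:3000/api/datasources -d "$(grafana_ds_payload Prometheus http://13.127.100.171:9090)"`. With "access":"proxy" the Grafana server, not the browser, talks to Prometheus.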

Worker Node :-

Node exporter

# wget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gz

# tar -xf node_exporter-0.17.0.linux-amd64.tar.gz

# cp node_exporter-0.17.0.linux-amd64/node_exporter /usr/local/bin

# chown root:root /usr/local/bin/node_exporter

# rm -rf node_exporter-0.17.0.linux-amd64*

Node exporter listens on port 9100 by default; here it is changed to 9501.

$ vim /etc/systemd/system/node_exporter.service

[Unit]
Description=Node Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/usr/local/bin/node_exporter --web.listen-address=:9501

[Install]
WantedBy=multi-user.target

$ systemctl daemon-reload

$ systemctl start node_exporter

$ systemctl enable node_exporter

$ systemctl status node_exporter

http://clientIP:9501/metrics
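To read a single value from that page on the command line, a small filter over the exposition text is enough. A sketch, assuming an unlabelled metric such as node_load1; metric_value is a hypothetical helper and skips labelled series.

```shell
#!/bin/sh
# Sketch: print the value of one unlabelled sample from exporter output on
# stdin. Comment lines (# HELP / # TYPE) and labelled series never match.
# metric_value is a hypothetical helper.

# metric_value NAME -> value of the sample whose name is exactly NAME
metric_value() {
  awk -v m="$1" '$1 == m { print $2 }'
}
```

Usage: `curl -s http://clientIP:9501/metrics | metric_value node_load1`.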

Server node :-

Add the node exporter target to prometheus.yml

# vim /etc/prometheus/prometheus.yml

scrape_configs:
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'node_example_com'
    scrape_interval: 5s
    static_configs:
      - targets: ['172.31.39.204:9501']

# systemctl restart prometheus

# systemctl status prometheus

Grafana :- Nginx connection

Enable the NGINX status page. First check that nginx was built with the stub_status module:

# nginx -V 2>&1 | grep -o with-http_stub_status_module

server {
    listen 80 default_server;
    server_name _;
    root /var/www/html;
    index index.html index.htm index.nginx-debian.html;

    location /nginx_status {
        stub_status;
        # allow 127.0.0.1;  # only allow requests from localhost
        # deny all;         # deny all other hosts
    }

    location / {
        try_files $uri $uri/ =404;
    }
}
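The /nginx_status page is plain text with a fixed layout (active connections; accepted, handled and requests totals; reading, writing and waiting). nginx-prometheus-exporter turns these numbers into metrics such as nginx_connections_active and nginx_http_requests_total. A sketch that parses the page by hand, assuming the standard stub_status layout; parse_stub_status is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: turn stub_status output (stdin) into "name value" lines.
# Assumes the standard four-line stub_status layout; the output names
# are hypothetical, not the exporter's metric names.
parse_stub_status() {
  awk '
    /^Active connections:/ { print "active", $3 }
    /^ *[0-9]+ +[0-9]+ +[0-9]+/ {
      print "accepted", $1; print "handled", $2; print "requests", $3
    }
    /^Reading:/ { print "reading", $2; print "writing", $4; print "waiting", $6 }
  '
}
```

Usage: `curl -s http://127.0.0.1/nginx_status | parse_stub_status`. If these numbers look right, the exporter scraping the same URL should report matching values.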

#cd /tmp

#wget https://github.com/nginxinc/nginx-prometheus-exporter/releases/download/v0.7.0/nginx-prometheus-exporter-0.7.0-linux-amd64.tar.gz

#tar -xf nginx-prometheus-exporter-0.7.0-linux-amd64.tar.gz

#mv nginx-prometheus-exporter /usr/local/bin

#useradd -r nginx_exporter

Create the systemd service file:

#vim /etc/systemd/system/nginx_prometheus_exporter.service

[Unit]
Description=NGINX Prometheus Exporter
After=network.target

[Service]
Type=simple
User=nginx_exporter
Group=nginx_exporter
ExecStart=/usr/local/bin/nginx-prometheus-exporter -web.listen-address=:9113 -nginx.scrape-uri http://127.0.0.1/nginx_status
SyslogIdentifier=nginx_prometheus_exporter
Restart=always

[Install]
WantedBy=multi-user.target

#systemctl daemon-reload

#service nginx_prometheus_exporter start

#service nginx_prometheus_exporter status

Prometheus side :-

# vim /etc/prometheus/prometheus.yml

  - job_name: 'nginx'
    scrape_interval: 7s
    static_configs:
      - targets: ['172.31.39.204:9113']

In Grafana, add the query, save the panel and change the visualization as needed.

Or import a ready-made Grafana dashboard for the nginx service:

Dashboard ID :- 12708

https://grafana.com/grafana/dashboards/12708

Test: stop nginx on the worker node and check that the dashboard shows it as down.

Monitoring nginx status codes such as 200, 300 and 404 from different logs:

1)/var/log/nginx/access_shashi.log

2) /var/log/nginx/access.log

Worker node :-

# vim /etc/nginx/nginx.conf

# logging config (inside the http { } block)
log_format custom '$remote_addr - $remote_user [$time_local] '
                  '"$request" $status $body_bytes_sent '
                  '"$http_referer" "$http_user_agent" "$http_x_forwarded_for"';

# rm -rf /etc/nginx/sites-enabled/default

# cat /etc/nginx/conf.d/myapp.conf

server {
    listen 80 default_server;
    server_name _;
    root /var/www/html;
    index index.html index.htm index.nginx-debian.html;

    location / {
        try_files $uri $uri/ =404;
    }
}

# cat /etc/nginx/conf.d/shashi.conf

server {
    listen 81 default_server;
    server_name _;
    root /var/www/html;
    index index.html index.htm index.nginx-debian.html;

    access_log /var/log/nginx/access_shashi.log custom;
    error_log /var/log/nginx/error_shashi.log;

    location / {
        try_files $uri $uri/ =404;
    }
}

# nginx -t

# systemctl restart nginx

# systemctl status nginx
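Before wiring up the log exporter, the status-code counts can be checked by hand. A sketch, assuming lines in the custom log_format above, where the status is the 9th whitespace-separated field when the request line has the usual "METHOD PATH PROTOCOL" form; status_counts is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: count responses per HTTP status in an access log read from stdin.
# Assumes the "custom" log_format, where $status is field 9 as long as
# the request line is "METHOD PATH PROTOCOL". status_counts is hypothetical.

# status_counts < access.log -> "STATUS COUNT" lines, sorted by status
status_counts() {
  awk '{ n[$9]++ } END { for (s in n) print s, n[s] }' | sort
}
```

Usage: `status_counts < /var/log/nginx/access_shashi.log`. These per-status totals are what the log exporter's response-count metric should roughly agree with.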

Download the Nginx log exporter

# wget https://github.com/martin-helmich/prometheus-nginxlog-exporter/releases/download/v1.4.0/prometheus-nginxlog-exporter

# chmod +x prometheus-nginxlog-exporter

# mv prometheus-nginxlog-exporter /usr/bin/prometheus-nginxlog-exporter

# mkdir /etc/prometheus

# vim /etc/prometheus/nginxlog_exporter.yml

listen:
  port: 4040
  address: "0.0.0.0"

consul:
  enable: false

namespaces:
  - name: shashi_log
    format: "$remote_addr - $remote_user [$time_local] \"$request\" $status $body_bytes_sent \"$http_referer\" \"$http_user_agent\" \"$http_x_forwarded_for\""
    source:
      files:
        - /var/log/nginx/access_shashi.log
    labels:
      service: "shashi_log"
      environment: "production"
      hostname: "shashi_log.example.com"
    histogram_buckets: [.005, .01, .025, .05, .1, .25, .5, 1, 2.5, 5, 10]

  - name: myapp_log
    format: "$remote_addr - $remote_user [$time_local] \"$request\" $status $body_bytes_sent \"$http_referer\" \"$http_user_agent\" \"$http_x_forwarded_for\""
    source:
      files:
        - /var/log/nginx/access.log
    labels:
      service: "myapp"
      environment: "production"
      hostname: "myapp.example.com"
    histogram_buckets: [.005, .01, .025, .05, .1, .25, .5, 1, 2.5, 5, 10]

Note :- both namespaces are entries of a single namespaces: list; a second namespaces: key in the same file is not valid YAML. Each format line must also match how its log is actually written: /var/log/nginx/access.log uses nginx's default combined format, which has no "$http_x_forwarded_for" field, unless its access_log directive is switched to the custom format.

# vim /etc/systemd/system/nginxlog_exporter.service

[Unit]
Description=Prometheus Log Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/usr/bin/prometheus-nginxlog-exporter -config-file /etc/prometheus/nginxlog_exporter.yml

[Install]
WantedBy=multi-user.target

# systemctl daemon-reload

# systemctl enable nginxlog_exporter

# systemctl restart nginxlog_exporter

# systemctl status nginxlog_exporter

curl http://localhost:4040/metrics

Server side :-

# vim /etc/prometheus/prometheus.yml

  - job_name: 'log_nginx'
    scrape_interval: 10s
    static_configs:
      - targets: ['172.31.39.204:4040']

# systemctl restart prometheus

# systemctl status prometheus

Metric names follow the pattern <namespace>_http_response_count_total, e.g.:

Execute :- shashi_log_http_response_count_total

Execute :- myapp_log_http_response_count_total

Grafana :- configuring Grafana and Prometheus Alertmanager

Custom rules

Example 1 :- how much memory is free on a node, in percent.

1. Create the rule file.

# vim /etc/prometheus/rules/prometheus_rules.yml

groups:
  - name: custom_rules
    rules:
      - record: node_memory_MemFree_percent
        expr: 100 * node_memory_MemFree_bytes / node_memory_MemTotal_bytes
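A quick way to sanity-check the free-memory percentage (100 * MemFree / MemTotal) on the worker node itself is to compute it from /proc/meminfo, which is where node_exporter reads these values on Linux. A sketch; free_percent is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: 100 * FREE / TOTAL with two decimals, for cross-checking the
# free-memory percentage. free_percent is a hypothetical helper.

# free_percent FREE TOTAL -> percentage, e.g. "25.00"
free_percent() {
  awk -v f="$1" -v t="$2" 'BEGIN { printf "%.2f\n", 100 * f / t }'
}
```

Usage: `free_percent "$(awk '/^MemFree:/ {print $2}' /proc/meminfo)" "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"`. Both values are in kB, so the units cancel; the result should be close to what the query returns for that instance at the same moment.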

2. Check the rule file (from /etc/prometheus/rules).

# promtool check rules prometheus_rules.yml

3. Add the rule file to /etc/prometheus/prometheus.yml

# vim /etc/prometheus/prometheus.yml

rule_files:
  - rules/prometheus_rules.yml

# systemctl daemon-reload

# systemctl restart prometheus

# systemctl status prometheus

4. Go to the Prometheus URL

Select Status --> Configuration

Select Status --> Rules

Execute the query node_memory_MemFree_percent

Example 2 :-

Free disk space in percent (add to the rules: list of the same group)

# vim /etc/prometheus/rules/prometheus_rules.yml

      - record: node_filesystem_free_percent
        expr: 100 * node_filesystem_free_bytes{mountpoint="/"} / node_filesystem_size_bytes{mountpoint="/"}
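The disk percentage can be cross-checked on the worker node with df. A sketch, assuming POSIX `df -P` output; df's Available column excludes blocks reserved for root, so it matches node_filesystem_avail_bytes rather than node_filesystem_free_bytes and can read slightly lower. df_free_percent is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: free-space percentage of the first filesystem row in `df -P`
# output read from stdin (column 2 = size, column 4 = available blocks).
# df_free_percent is a hypothetical helper.
df_free_percent() {
  awk 'NR == 2 { printf "%.2f\n", 100 * $4 / $2 }'
}
```

Usage: `df -P / | df_free_percent`.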

# promtool check rules prometheus_rules.yml

# systemctl restart prometheus

# systemctl status prometheus

Alert rules :-

1. Rule for an instance that is down.

2. Rule for free disk space of 10% or less.

# vim /etc/prometheus/rules/prometheus_alert_rules.yml

groups:
  - name: alert_rules
    rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: critical
        annotations:
          summary: "Instance [{{ $labels.instance }}] down"
          description: "[{{ $labels.instance }}] of job [{{ $labels.job }}] has been down for more than 1 minute."

      - alert: DiskSpaceFree10Percent
        expr: node_filesystem_free_percent <= 10
        labels:
          severity: warning
        annotations:
          summary: "Instance [{{ $labels.instance }}] has 10% or less free disk space"
          description: "[{{ $labels.instance }}] has only {{ $value }}% or less free."

# promtool check rules prometheus_alert_rules.yml

# vim /etc/prometheus/prometheus.yml

rule_files:
  - rules/prometheus_rules.yml
  - rules/prometheus_alert_rules.yml

# systemctl daemon-reload

# systemctl restart prometheus

# systemctl status prometheus

Alert Manager Setup

# wget https://github.com/prometheus/alertmanager/releases/download/v0.21.0/alertmanager-0.21.0.linux-amd64.tar.gz

# tar xvf alertmanager-0.21.0.linux-amd64.tar.gz

# cd alertmanager-0.21.0.linux-amd64

# cp -rvf alertmanager /usr/local/bin/

# cp -rvf amtool /usr/local/bin/

# cp -rvf alertmanager.yml /etc/prometheus/

# vim /etc/systemd/system/alertmanager.service

[Unit]
Description=Prometheus Alert Manager Service
After=network.target

[Service]
Type=simple
ExecStart=/usr/local/bin/alertmanager \
  --config.file=/etc/prometheus/alertmanager.yml

[Install]
WantedBy=multi-user.target

Change alertmanager.yml:

global:
  resolve_timeout: 5m

route:
  group_by: ['alertname']
  receiver: 'email-me'

receivers:
  - name: 'email-me'
    email_configs:
      - send_resolved: true
        to: devopstest11@gmail.com
        from: devopstest11@gmail.com
        smarthost: smtp.gmail.com:587
        auth_username: "devopstest11@gmail.com"
        auth_identity: "devopstest11@gmail.com"
        auth_password: "pass@123"

# amtool check-config /etc/prometheus/alertmanager.yml

# systemctl daemon-reload

# service alertmanager start

# service alertmanager status

# vim /etc/prometheus/prometheus.yml

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - localhost:9093

# systemctl restart prometheus

# systemctl status prometheus

http://13.127.100.171:9090/status

Select Status --> Runtime & Build Information.

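Test the alert :-

Worker node :- stop node_exporter; after about 1 minute the InstanceDown alert fires and an email is sent.

# systemctl stop node_exporter.service

Server node :- watch the logs:

# tail -f /var/log/syslog

If Gmail rejects the SMTP login, go to the Google account Settings --> Security.

NOTE :- Less secure app access :- ON (Google has since retired this setting for most accounts; with 2-Step Verification enabled, an App Password is used as auth_password instead.)

Worker node :- start node_exporter again; because send_resolved is true, a "resolved" email is received.

# systemctl start node_exporter.service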
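Stopping an exporter is one way to test the email route; another is to post a hand-made alert straight to Alertmanager. A sketch of the request body, assuming Alertmanager's v2 API (POST /api/v2/alerts); test_alert_payload, the alert name and the severity are hypothetical. Without an endsAt field, Alertmanager should treat the alert as resolved once resolve_timeout (5m here) passes without it being sent again.

```shell
#!/bin/sh
# Sketch: JSON body for one manual test alert for Alertmanager's v2 API.
# test_alert_payload and its values are hypothetical; no JSON escaping.

# test_alert_payload ALERTNAME SEVERITY -> JSON array with one alert
test_alert_payload() {
  printf '[{"labels":{"alertname":"%s","severity":"%s"},"annotations":{"summary":"manual test alert"}}]\n' "$1" "$2"
}
```

Usage: `curl -H 'Content-Type: application/json' -X POST http://localhost:9093/api/v2/alerts -d "$(test_alert_payload TestAlert warning)"`. Since the route groups by alertname, the test alert arrives as its own email.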

1. Use the Inspect option to see the data received from Prometheus and to rename the panel title in the JSON.

Inspect --> (Data, Stats, JSON, Query)

2. How to restore an old dashboard version.

Settings --> Versions

3. Add metrics manually.

Add panel --> (panel name) Edit --> Metrics

Pushgateway :-

In this section we set up Pushgateway on a Linux machine, push some custom metrics to it, and configure Prometheus to scrape the metrics from the Pushgateway.

1. Install Pushgateway.

# wget https://github.com/prometheus/pushgateway/releases/download/v0.8.0/pushgateway-0.8.0.linux-amd64.tar.gz

# tar -xvf pushgateway-0.8.0.linux-amd64.tar.gz

# cp pushgateway-0.8.0.linux-amd64/pushgateway /usr/local/bin/pushgateway

# chown root:root /usr/local/bin/pushgateway

# vim /etc/systemd/system/pushgateway.service

[Unit]
Description=Pushgateway
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/usr/local/bin/pushgateway

[Install]
WantedBy=multi-user.target

# systemctl daemon-reload

# systemctl restart pushgateway

# systemctl status pushgateway

# vim /etc/prometheus/prometheus.yml

  - job_name: 'pushgateway'
    honor_labels: true
    static_configs:
      - targets: ['localhost:9091']

# systemctl restart prometheus

Run the command below from the client side (replace localhost with the Pushgateway server's address when pushing from another machine):

# echo "cpu_utilization 20.25" | curl --data-binary @- http://localhost:9091/metrics/job/my_custom_metrics/instance/client_host/cpu/load

Take a look at the metrics endpoint of the Pushgateway:

# curl -L http://172.31.5.171:9091/metrics/ 2>&1 | grep "cpu_utilization"

Check the metric in the Pushgateway UI (port 9091), then query it from the Prometheus URL.
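Pushgateway groups pushed metrics by the label pairs in the URL path after /metrics/job/<job>. A sketch of helpers that build that path and push one gauge with a TYPE line (without one, the Pushgateway stores the sample as untyped); push_url and push_gauge are hypothetical names, and the host and labels are the examples used above.

```shell
#!/bin/sh
# Sketch: build a Pushgateway grouping-key URL and push one gauge sample.
# push_url / push_gauge are hypothetical; host, job and labels are examples.

# push_url HOST:PORT JOB [LABEL VALUE]... -> http://HOST:PORT/metrics/job/JOB/LABEL/VALUE...
push_url() {
  url="http://$1/metrics/job/$2"
  shift 2
  while [ $# -ge 2 ]; do
    url="$url/$1/$2"
    shift 2
  done
  printf '%s\n' "$url"
}

# push_gauge URL NAME VALUE -> sends "# TYPE NAME gauge" plus "NAME VALUE"
push_gauge() {
  printf '# TYPE %s gauge\n%s %s\n' "$2" "$2" "$3" |
    curl --silent --show-error --data-binary @- "$1"
}
```

Usage: `push_gauge "$(push_url 172.31.5.171:9091 my_custom_metrics instance client_host)" cpu_utilization 20.25`. Pushing again to the same URL replaces the metrics stored under that grouping key.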

BlackBox Exporter :-

Client side configuration of BlackBox.

# cd /opt

# wget https://github.com/prometheus/blackbox_exporter/releases/download/v0.14.0/blackbox_exporter-0.14.0.linux-amd64.tar.gz

# tar -xvf blackbox_exporter-0.14.0.linux-amd64.tar.gz

# cp blackbox_exporter-0.14.0.linux-amd64/blackbox_exporter /usr/local/bin/blackbox_exporter

# rm -rf blackbox_exporter-0.14.0.linux-amd64*

# mkdir /etc/blackbox_exporter

# vim /etc/blackbox_exporter/blackbox.yml

modules:
  http_2xx:
    prober: http
    timeout: 5s
    http:
      valid_status_codes: []
      method: GET

# vim /etc/systemd/system/blackbox_exporter.service

[Unit]
Description=Blackbox Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/usr/local/bin/blackbox_exporter --config.file /etc/blackbox_exporter/blackbox.yml

[Install]
WantedBy=multi-user.target

# systemctl daemon-reload

# systemctl start blackbox_exporter

# systemctl status blackbox_exporter

# systemctl enable blackbox_exporter

Note :- on the client side, nginx is running on port 8281 and nothing is running on port 8282, so one probe should succeed and the other should fail.

Prometheus server side :-

# vim /etc/prometheus/prometheus.yml

  - job_name: 'blackbox'
    metrics_path: /probe
    params:
      module: [http_2xx]
    static_configs:
      - targets:
          - http://172.31.42.127:8281
          - http://172.31.42.127:8282
    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: 172.31.42.127:9115

# systemctl restart prometheus

# systemctl status prometheus

Verify the Blackbox exporter:

http://52.66.196.119:9115/metrics

Verify the blackbox probe status from Prometheus (Status --> Targets).
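The relabel_configs above turn each listed URL into a target parameter and send the scrape to the exporter's /probe path with the configured module. A sketch that builds the same request by hand so a probe can be tried with curl; probe_url is a hypothetical helper, and the target is not URL-encoded, which is fine for simple URLs like these.

```shell
#!/bin/sh
# Sketch: the probe request Prometheus sends after relabeling, built by hand.
# probe_url is a hypothetical helper; TARGET is inserted without encoding.

# probe_url EXPORTER_HOST:PORT MODULE TARGET -> blackbox exporter probe URL
probe_url() {
  printf 'http://%s/probe?module=%s&target=%s\n' "$1" "$2" "$3"
}
```

Usage: `curl -s "$(probe_url 172.31.42.127:9115 http_2xx http://172.31.42.127:8281)" | grep '^probe_success'` should show 1 for port 8281 and 0 for port 8282.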