Two servers

1. Server – install Prometheus, Grafana, Alertmanager, and Pushgateway.

2. Worker node – install node_exporter, nginx_exporter, nginxlog exporter, and blackbox exporter.

exporter --> prometheus(promQL) --> grafana

Prometheus :-

Prometheus is an open-source monitoring tool, written in Go, that records real-time metrics in a time-series database. It collects metrics by pulling (scraping) them from targets over HTTP, a design intended to perform and scale well.

Exporters:- These are libraries that help with exporting metrics from third-party systems as Prometheus metrics.

i) Targets (Linux, Windows, applications) → CPU status, memory/disk usage, request count → each such unit is called a metric, and metrics are saved in the Prometheus DB.

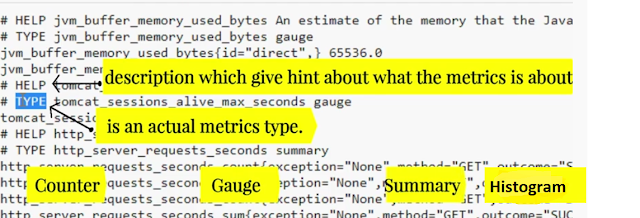

ii) Metrics format - human-readable and text-based.

HELP :- description of what the metric is.

TYPE :- one of the four metric types below.

1) Counter :- How many times X happened. (The value only increases; it never decreases.)

i) Number of requests served.

ii) Tasks completed or errors.

2) Gauge :- What is the current value of X now? (It can both increase and decrease, e.g. CPU load now, disk space now.)

3) Summary :- How long something took or how big something was.

i) Count shows the number of times the event was observed.

ii) Sum shows the total of the times taken by that event.

4) Histogram :- How long something took or how big something was, with the observations counted into buckets.
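The HELP and TYPE lines and the metric types above can be seen in the text format itself. The following is a minimal Python sketch that reads a small sample; the sample text and the function name `parse_exposition` are made up for illustration, and the parser only handles samples without labels.

```python
# Minimal sketch: read the Prometheus text exposition format.
# SAMPLE and parse_exposition are illustrative, not part of any exporter.
SAMPLE = """\
# HELP http_requests_total Total HTTP requests served.
# TYPE http_requests_total counter
http_requests_total 1027
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
"""


def parse_exposition(text):
    """Return {metric_name: {"help": str, "type": str, "value": float}}."""
    metrics = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("# HELP "):
            name, _, help_text = line[len("# HELP "):].partition(" ")
            metrics.setdefault(name, {})["help"] = help_text
        elif line.startswith("# TYPE "):
            name, _, kind = line[len("# TYPE "):].partition(" ")
            metrics.setdefault(name, {})["type"] = kind
        elif not line.startswith("#"):
            # Sample line without labels: "<name> <value>"
            name, value = line.split()[:2]
            metrics.setdefault(name, {})["value"] = float(value)
    return metrics


if __name__ == "__main__":
    for name, info in parse_exposition(SAMPLE).items():
        print(name, info["type"], info["value"])
```

A counter such as `http_requests_total` is normally queried through its rate of change, while a gauge such as `node_load1` is read directly.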

1.Create Prometheus system group

sudo groupadd --system prometheus

sudo useradd -s /sbin/nologin --system -g prometheus prometheus

sudo mkdir /var/lib/prometheus

for i in rules rules.d files_sd; do sudo mkdir -p /etc/prometheus/${i}; done

sudo apt update

sudo apt -y install wget curl vim

mkdir -p /tmp/prometheus && cd /tmp/prometheus

wget https://github.com/prometheus/prometheus/releases/download/v2.23.0/prometheus-2.23.0.linux-amd64.tar.gz

tar xvf prometheus*.tar.gz

cd prometheus*/

sudo mv prometheus promtool /usr/local/bin/

promtool --version

sudo mv prometheus.yml /etc/prometheus/prometheus.yml

sudo mv consoles/ console_libraries/ /etc/prometheus/

sudo vim /etc/prometheus/prometheus.yml

scrape_configs:
  - job_name: 'prometheus'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']

sudo vim /etc/systemd/system/prometheus.service

[Unit]
Description=Prometheus
Documentation=https://prometheus.io/docs/introduction/overview/
Wants=network-online.target
After=network-online.target

[Service]
Type=simple
User=prometheus
Group=prometheus
ExecReload=/bin/kill -HUP $MAINPID
ExecStart=/usr/local/bin/prometheus \
  --config.file=/etc/prometheus/prometheus.yml \
  --storage.tsdb.path=/var/lib/prometheus \
  --web.console.templates=/etc/prometheus/consoles \
  --web.console.libraries=/etc/prometheus/console_libraries \
  --web.listen-address=0.0.0.0:9090
# For security, use the private IP in --web.listen-address instead of 0.0.0.0.
Restart=always

[Install]
WantedBy=multi-user.target

Alternatively, a unit file that runs Prometheus as root and enables the admin API and the lifecycle (reload) endpoint:

[Unit]
Description=Prometheus
Wants=network-online.target
After=network-online.target

[Service]
Type=simple
User=root
Group=root
ExecStart=/usr/local/bin/prometheus \
  --config.file=/etc/prometheus/prometheus.yml \
  --storage.tsdb.path=/var/lib/prometheus \
  --web.console.templates=/etc/prometheus/consoles \
  --web.console.libraries=/etc/prometheus/console_libraries \
  --web.enable-admin-api \
  --web.enable-lifecycle
SyslogIdentifier=prometheus
Restart=always

[Install]
WantedBy=multi-user.target

######################

for i in rules rules.d files_sd; do sudo chown -R prometheus:prometheus /etc/prometheus/${i}; done

for i in rules rules.d files_sd; do sudo chmod -R 775 /etc/prometheus/${i}; done

sudo chown -R prometheus:prometheus /var/lib/prometheus/

sudo systemctl daemon-reload

sudo systemctl start prometheus

sudo systemctl enable prometheus

sudo systemctl status prometheus

Optionally, put Prometheus behind nginx with basic auth:

# htpasswd -c /etc/nginx/.htpasswd admin

server {
    listen 80 default_server;

    location / {
        auth_basic "Prometheus Auth";
        auth_basic_user_file /etc/nginx/.htpasswd;
        proxy_pass http://localhost:9090;
    }
}

Then, in the Grafana data source:

1. Add the URL.

2. Enable Basic auth.

3. Add the username and password.

Note :-

To reload the Prometheus configuration from the client side without a restart (this needs the --web.enable-lifecycle flag):

# curl -X POST http://localhost:9090/-/reload
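The same reload call can also be made from a script. Below is a minimal Python sketch using only the standard library; the function names `build_reload_request` and `reload_prometheus` are illustrative, and the base URL is assumed to be the local Prometheus from this setup with the lifecycle flag enabled.

```python
import urllib.request


def build_reload_request(base_url="http://localhost:9090"):
    """Build (but do not send) the POST request for the reload endpoint."""
    url = base_url.rstrip("/") + "/-/reload"
    return urllib.request.Request(url, data=b"", method="POST")


def reload_prometheus(base_url="http://localhost:9090", timeout=5):
    """Send the reload request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError when the server answers with
    an error status, for example when the lifecycle API is disabled.
    """
    request = build_reload_request(base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Only show what would be sent; call reload_prometheus() to send it.
    req = build_reload_request()
    print(req.get_method(), req.full_url)
```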

Install Grafana on Ubuntu 20.04

wget -q -O - https://packages.grafana.com/gpg.key | sudo apt-key add -

echo "deb https://packages.grafana.com/oss/deb stable main" | sudo tee -a /etc/apt/sources.list.d/grafana.list

sudo apt-get update

sudo apt-get install grafana

sudo systemctl start grafana-server

sudo systemctl enable grafana-server

sudo systemctl status grafana-server

Default logins are:

Username: admin

Password: admin

Grafana Package details:

Installs binary to /usr/sbin/grafana-server

Installs Init.d script to /etc/init.d/grafana-server

Creates default file (environment vars) to /etc/default/grafana-server

Installs configuration file to /etc/grafana/grafana.ini

Installs systemd service (if systemd is available) name grafana-server.service

The default configuration sets the log file at /var/log/grafana/grafana.log

The default configuration specifies a sqlite3 db at /var/lib/grafana/grafana.db

# grafana-cli plugins install grafana-image-renderer

Go to "Data sources" – Add data source – select Prometheus

Add the Prometheus URL http://13.127.100.171:9090

Node exporter

# tar -xf node_exporter-0.17.0.linux-amd64.tar.gz

# cp node_exporter-0.17.0.linux-amd64/node_exporter /usr/local/bin

# chown root:root /usr/local/bin/node_exporter

# rm -rf node_exporter-0.17.0.linux-amd64*

Create the systemd service file for node_exporter and change the listen port to 9501:

[Unit]
Description=Node Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/usr/local/bin/node_exporter --web.listen-address=:9501

[Install]
WantedBy=multi-user.target

$ systemctl start node_exporter

$ systemctl enable node_exporter

$ systemctl status node_exporter

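Add the node exporter target in prometheus.yml

scrape_configs:
  - job_name: 'prometheus'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090']

  # The job_name line for this block is missing in the source; 'node_exporter' is an assumed name.
  - job_name: 'node_exporter'
    scrape_interval: 5s
    static_configs:
      - targets: ['172.31.39.204:9501']

# systemctl restart prometheus

# systemctl status prometheus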

Grafana :-

Enable NGINX Status Page

# nginx -V 2>&1 | grep -o with-http_stub_status_module

server {
    # remove the escape char if you are going to use this config
    server_name \_;
    index index.html index.htm index.nginx-debian.html;

    location /nginx_status {
        stub_status;
        # allow 127.0.0.1; # only allow requests from localhost
        # deny all;        # deny all other hosts
    }

    location / {
        try_files $uri $uri/ =404;
    }
}

#tar -xf nginx-prometheus-exporter-0.7.0-linux-amd64.tar.gz

#mv nginx-prometheus-exporter /usr/local/bin

#useradd -r nginx_exporter

# Create Systemd Service File

[Unit]
Description=NGINX Prometheus Exporter
After=network.target

[Service]
Type=simple
User=nginx_exporter
Group=nginx_exporter
ExecStart=/usr/local/bin/nginx-prometheus-exporter -web.listen-address=":9113" -nginx.scrape-uri http://127.0.0.1/nginx_status
Restart=always

[Install]
WantedBy=multi-user.target

#service nginx_prometheus_exporter status

#service nginx_prometheus_exporter start

  - job_name: 'nginx'
    scrape_interval: 7s
    static_configs:
      - targets: ['172.31.39.204:9113']

Add Query and save

Change Visualization :-

Use a ready-made Grafana dashboard for the nginx service:

Dashboard ID :- 12708

https://grafana.com/grafana/dashboards/12708

Nginx stop in Worker node:-

Monitoring nginx status code counts (such as 200, 300, 404) from different logs.

1)/var/log/nginx/access_shashi.log

2) /var/log/nginx/access.log

Worker node :-

# vim /etc/nginx/nginx.conf

log_format custom '$remote_addr - $remote_user [$time_local] '
                  '"$request" $status $body_bytes_sent '
                  '"$http_referer" "$http_user_agent" "$http_x_forwarded_for"';

server {
    # remove the escape char if you are going to use this config
    server_name \_;
    index index.html index.htm index.nginx-debian.html;
    # access_log line reconstructed from the log paths listed above
    access_log /var/log/nginx/access.log custom;

    location / {
        try_files $uri $uri/ =404;
    }
}

server {
    # remove the escape char if you are going to use this config
    server_name \_;
    index index.html index.htm index.nginx-debian.html;
    # access_log line reconstructed from the log paths listed above
    access_log /var/log/nginx/access_shashi.log custom;
    error_log /var/log/nginx/error_shashi.log;

    location / {
        try_files $uri $uri/ =404;
    }
}

# systemctl restart nginx

# wget https://github.com/martin-helmich/prometheus-nginxlog-exporter/releases/download/v1.4.0/prometheus-nginxlog-exporter

# mv prometheus-nginxlog-exporter /usr/bin/prometheus-nginxlog-exporter

# chmod +x /usr/bin/prometheus-nginxlog-exporter

# vim /etc/prometheus/nginxlog_exporter.yml

listen:
  port: 4040
  address: "0.0.0.0"

consul:
  enable: false

namespaces:
  - name: shashi_log
    format: "$remote_addr - $remote_user [$time_local] \"$request\" $status $body_bytes_sent \"$http_referer\" \"$http_user_agent\" \"$http_x_forwarded_for\""
    source:
      files:
        - /var/log/nginx/access_shashi.log
    labels:
      service: "shashi_log"
      environment: "production"
      hostname: "shashi_log.example.com"
    histogram_buckets: [.005, .01, .025, .05, .1, .25, .5, 1, 2.5, 5, 10]

  - name: myapp_log
    format: "$remote_addr - $remote_user [$time_local] \"$request\" $status $body_bytes_sent \"$http_referer\" \"$http_user_agent\" \"$http_x_forwarded_for\""
    source:
      files:
        - /var/log/nginx/access.log
    labels:
      service: "myapp"
      environment: "production"
      hostname: "myapp.example.com"
    histogram_buckets: [.005, .01, .025, .05, .1, .25, .5, 1, 2.5, 5, 10]

# vim /etc/systemd/system/nginxlog_exporter.service

[Unit]

Description=Prometheus Log Exporter

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Type=simple

ExecStart=/usr/bin/prometheus-nginxlog-exporter -config-file /etc/prometheus/nginxlog_exporter.yml

[Install]

WantedBy=multi-user.target

# systemctl daemon-reload

# systemctl enable nginxlog_exporter

# systemctl restart nginxlog_exporter

# systemctl status nginxlog_exporter

curl http://localhost:4040/metrics

Server side :-

# vim /etc/prometheus/prometheus.yml

  - job_name: 'log_nginx'
    scrape_interval: 10s
    static_configs:
      - targets: ['172.31.39.204:4040']

# systemctl restart prometheus

# systemctl status prometheus

The metric names are prefixed with the namespace name from the exporter config, e.g. :- <namespace>_http_response_count_total

Execute :- shashi_log_http_response_count_total

Execute :- myapp_log_http_response_count_total
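As a small illustration of the naming pattern above, the Python sketch below builds the metric name for a namespace and a PromQL query that breaks the request rate down by status code. The helper names are made up for this example, and the `status` label is assumed to be present on the exporter's response counter.

```python
def response_count_metric(namespace):
    """Metric name following the <namespace>_http_response_count_total pattern."""
    return f"{namespace}_http_response_count_total"


def per_status_rate_query(namespace, window="5m"):
    """PromQL: per-second request rate over `window`, summed per status code.

    Assumes the counter carries a `status` label.
    """
    metric = response_count_metric(namespace)
    return f"sum by (status) (rate({metric}[{window}]))"


if __name__ == "__main__":
    print(response_count_metric("shashi_log"))
    print(per_status_rate_query("shashi_log"))
```

The printed query can be pasted into the Prometheus expression browser or used as a Grafana panel query.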

Grafana :-

Configuring Grafana and Prometheus Alertmanager

Custom rules

1. Percentage of free memory for a node.

# vim /etc/prometheus/rules/prometheus_rules.yml

groups:
  - name: custom_rules
    rules:
      - record: node_memory_MemFree_percent
        expr: 100 * node_memory_MemFree_bytes / node_memory_MemTotal_bytes

2. Check the rule file.

# promtool check rules prometheus_rules.yml

3. Add the prometheus_rules.yml file to /etc/prometheus/prometheus.yml

# vim /etc/prometheus/prometheus.yml

rule_files:
  - rules/prometheus_rules.yml

# systemctl daemon-reload

# systemctl restart prometheus

# systemctl status prometheus

4. Go to the Prometheus URL

# select Status → Configuration

# select Status → Rules

# execute query – node_memory_MemFree_percent

Free disk space in percent

# vim /etc/prometheus/rules/prometheus_rules.yml

      - record: node_filesystem_free_percent
        expr: 100 * node_filesystem_free_bytes{mountpoint="/"} / node_filesystem_size_bytes{mountpoint="/"}

# systemctl restart prometheus

# systemctl status prometheus
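To make the arithmetic of the disk rule concrete, here is a minimal Python sketch of the same formula applied to plain numbers. The function name `free_percent` and the sample sizes are illustrative only.

```python
def free_percent(free_bytes, size_bytes):
    """Same arithmetic as the recording rule: 100 * free / size."""
    if size_bytes <= 0:
        raise ValueError("size_bytes must be positive")
    return 100 * free_bytes / size_bytes


if __name__ == "__main__":
    gib = 1024 ** 3
    # A 50 GiB root filesystem with 5 GiB free.
    print(free_percent(5 * gib, 50 * gib))
```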

1. Rule for InstanceDown

2. Rule for DiskSpaceFree10Percent (10% or less free disk space)

# vim /etc/prometheus/rules/prometheus_alert_rules.yml

groups:
  - name: alert_rules
    rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: critical
        annotations:
          summary: "Instance [{{ $labels.instance }}] down"
          description: "[{{ $labels.instance }}] of job [{{ $labels.job }}] has been down for more than 1 minute."

      - alert: DiskSpaceFree10Percent
        expr: node_filesystem_free_percent <= 10
        labels:
          severity: warning
        annotations:
          summary: "Instance [{{ $labels.instance }}] has 10% or less Free disk space"
          description: "[{{ $labels.instance }}] has only {{ $value }}% or less free."

# promtool check rules prometheus_alert_rules.yml

# vim /etc/prometheus/prometheus.yml

rule_files:
  - rules/prometheus_rules.yml
  - rules/prometheus_alert_rules.yml

# systemctl daemon-reload

# systemctl restart prometheus
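The "for: 1m" clause in the InstanceDown rule means the condition has to hold continuously for one minute before the alert fires; until then it is only pending. The Python sketch below is a simplified model of that behaviour, not Prometheus code; the function name and the 15-second sample spacing are assumptions made for the example.

```python
def alert_states(samples, hold_seconds, condition):
    """Model the inactive -> pending -> firing transitions of one alert.

    samples: list of (timestamp_seconds, value) in evaluation order.
    hold_seconds: the rule's `for` duration.
    condition: function(value) -> bool, e.g. lambda up: up == 0.
    """
    states = []
    active_since = None
    for timestamp, value in samples:
        if condition(value):
            if active_since is None:
                active_since = timestamp  # condition just became true
            held = timestamp - active_since
            states.append("firing" if held >= hold_seconds else "pending")
        else:
            active_since = None  # any false evaluation resets the timer
            states.append("inactive")
    return states


if __name__ == "__main__":
    # `up` sampled every 15 seconds; the target goes down at t=15.
    up_samples = [(0, 1), (15, 0), (30, 0), (45, 0), (60, 0), (75, 0), (90, 1)]
    print(alert_states(up_samples, 60, lambda up: up == 0))
```

In this model the alert fires at t=75, one full minute after the first failed evaluation, and drops back to inactive as soon as the target is up again.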

Alert Manager Setup

# tar xvf alertmanager-0.21.0.linux-amd64.tar.gz

# cd alertmanager-0.21.0.linux-amd64

# cp -rvf alertmanager /usr/local/bin/

# cp -rvf amtool /usr/local/bin/

# cp -rvf alertmanager.yml /etc/prometheus/

# vim /etc/systemd/system/alertmanager.service

[Unit]

Description=Prometheus Alert Manager Service

After=network.target

[Service]

Type=simple

ExecStart=/usr/local/bin/alertmanager \

--config.file=/etc/prometheus/alertmanager.yml

[Install]

WantedBy=multi-user.target

Change alertmanager.yml

global:
  resolve_timeout: 5m

route:
  group_by: ['alertname']
  receiver: 'email-me'

receivers:
  - name: 'email-me'
    email_configs:
      - send_resolved: true
        to: devopstest11@gmail.com
        from: devopstest11@gmail.com
        smarthost: smtp.gmail.com:587
        auth_username: "devopstest11@gmail.com"
        auth_identity: "devopstest11@gmail.com"
        auth_password: "pass@123"

# service alertmanager start

# service alertmanager status
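The group_by setting in alertmanager.yml decides which alerts are bundled into one notification: alerts with the same values for the listed labels end up in the same group. A minimal Python sketch of that grouping idea follows; it is a simplified model with made-up sample alerts, not Alertmanager's implementation.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Group alert label sets by the values of the labels in group_by."""
    groups = defaultdict(list)
    for alert in alerts:
        # Missing labels are treated as empty values in this sketch.
        key = tuple(alert.get(label, "") for label in group_by)
        groups[key].append(alert)
    return dict(groups)


if __name__ == "__main__":
    alerts = [
        {"alertname": "InstanceDown", "instance": "172.31.39.204:9501"},
        {"alertname": "InstanceDown", "instance": "172.31.39.204:9113"},
        {"alertname": "DiskSpaceFree10Percent", "instance": "172.31.39.204:9501"},
    ]
    for key, members in group_alerts(alerts, ["alertname"]).items():
        print(key, len(members))
```

With group_by set to alertname, the two InstanceDown alerts would arrive in one mail and the disk alert in another.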

Add the Alertmanager target in prometheus.yml:

alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - localhost:9093

# systemctl restart prometheus

# systemctl status prometheus

http://13.127.100.171:9090/status

select Status → Runtime & Build Information.

# systemctl stop node_exporter.service

Logs :

# tail -f /var/log/syslog

NOTE :- Less secure app access must be ON for the Gmail account.

Worker node -

# systemctl start node_exporter.service

(A mail is received saying the issue is resolved.)

Inspect – (Data, Stats, JSON, Query)

2. How to restore an old dashboard.

Settings – Versions

3. Manually add metrics

Add panel → (panel name) → Edit → Metrics

Pushgateway :-

In this tutorial, we will set up Pushgateway on a Linux machine, push some custom metrics to it, and configure Prometheus to scrape metrics from Pushgateway.

1.Install Pushgateway Exporter.

# wget https://github.com/prometheus/pushgateway/releases/download/v0.8.0/pushgateway-0.8.0.linux-amd64.tar.gz

# tar -xvf pushgateway-0.8.0.linux-amd64.tar.gz

# cp pushgateway-0.8.0.linux-amd64/pushgateway /usr/local/bin/pushgateway

# chown root:root /usr/local/bin/pushgateway

# vim /etc/systemd/system/pushgateway.service

[Unit]

Description=Pushgateway

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Type=simple

ExecStart=/usr/local/bin/pushgateway

[Install]

WantedBy=multi-user.target

# systemctl daemon-reload

# systemctl restart pushgateway

# systemctl status pushgateway

# vim /etc/prometheus/prometheus.yml

  - job_name: 'pushgateway'
    honor_labels: true
    static_configs:
      - targets: ['localhost:9091']

# systemctl restart prometheus

Run the command below from the client side:-

# echo "cpu_utilization 20.25" | curl --data-binary @- http://localhost:9091/metrics/job/my_custom_metrics/instance/client_host/cpu/load

Take a look at the metrics endpoint of the pushgateway:

# curl -L http://172.31.5.171:9091/metrics/ 2>&1| grep "cpu_utilization"
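The push URL in the curl command encodes the grouping labels as path segments: /metrics/job/<job> followed by label name and value pairs. The Python sketch below rebuilds that URL and request body with the standard library; the function names are illustrative, and the `push` function assumes a Pushgateway is reachable at the given address.

```python
import urllib.request
from urllib.parse import quote


def push_url(base, job, labels):
    """Build <base>/metrics/job/<job>/<name>/<value>/... from grouping labels."""
    parts = [base.rstrip("/"), "metrics", "job", quote(job, safe="")]
    for name, value in labels.items():
        parts += [name, quote(value, safe="")]
    return "/".join(parts)


def push_body(metric, value):
    """One sample in text format; the trailing newline is required."""
    return f"{metric} {value}\n".encode()


def push(base, job, labels, metric, value, timeout=5):
    """POST the sample, like `curl --data-binary @-` does."""
    request = urllib.request.Request(
        push_url(base, job, labels), data=push_body(metric, value), method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    labels = {"instance": "client_host", "cpu": "load"}
    print(push_url("http://localhost:9091", "my_custom_metrics", labels))
    print(push_body("cpu_utilization", 20.25))
```

Because the scrape job sets honor_labels: true, the job and instance labels pushed this way are kept as-is when Prometheus scrapes the Pushgateway.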

## Pushgateway URL

## Go to Prometheus URL

BlackBox Exporter :-

Client side configuration of BlackBox.

# cd /opt

# wget https://github.com/prometheus/blackbox_exporter/releases/download/v0.14.0/blackbox_exporter-0.14.0.linux-amd64.tar.gz

# tar -xvf blackbox_exporter-0.14.0.linux-amd64.tar.gz

# cp blackbox_exporter-0.14.0.linux-amd64/blackbox_exporter /usr/local/bin/blackbox_exporter

# rm -rf blackbox_exporter-0.14.0.linux-amd64*

# mkdir /etc/blackbox_exporter

# vim /etc/blackbox_exporter/blackbox.yml

modules:
  http_2xx:
    prober: http
    timeout: 5s
    http:
      valid_status_codes: []
      method: GET

# vim /etc/systemd/system/blackbox_exporter.service

[Unit]

Description=Blackbox Exporter

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Type=simple

ExecStart=/usr/local/bin/blackbox_exporter --config.file /etc/blackbox_exporter/blackbox.yml

[Install]

WantedBy=multi-user.target

# systemctl daemon-reload

# systemctl start blackbox_exporter

# systemctl status blackbox_exporter

# systemctl enable blackbox_exporter

Note :- On the client side, nginx is running on port 8281 and nothing is running on port 8282.

Prometheus server side :-

# vim /etc/prometheus/prometheus.yml

  - job_name: 'blackbox'
    metrics_path: /probe
    params:
      module: [http_2xx]
    static_configs:
      - targets:
          - http://172.31.42.127:8281
          - http://172.31.42.127:8282
    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: 172.31.42.127:9115

# systemctl restart prometheus

# systemctl status prometheus

# verify blackBox exporter

# http://52.66.196.119:9115/metrics

# verify blackbox status from Prometheus .
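The relabel_configs in the blackbox job are the least obvious part of this setup: they turn each listed URL into a "target" query parameter and point the actual scrape at the exporter. The Python sketch below walks through those three steps for one target; it is a simplified model of the relabeling with illustrative function names, not Prometheus code.

```python
from urllib.parse import urlencode


def apply_blackbox_relabeling(target, exporter_address):
    """Apply the three relabel steps from the blackbox job to one target."""
    labels = {"__address__": target}
    # Step 1: copy the probed URL into the `target` URL parameter.
    labels["__param_target"] = labels["__address__"]
    # Step 2: keep the probed URL as the `instance` label.
    labels["instance"] = labels["__param_target"]
    # Step 3: scrape the blackbox exporter instead of the probed URL.
    labels["__address__"] = exporter_address
    return labels


def probe_url(labels, module="http_2xx", metrics_path="/probe"):
    """The URL that ends up being scraped for this target."""
    query = urlencode({"module": module, "target": labels["__param_target"]})
    return f"http://{labels['__address__']}{metrics_path}?{query}"


if __name__ == "__main__":
    relabeled = apply_blackbox_relabeling(
        "http://172.31.42.127:8281", "172.31.42.127:9115"
    )
    print(relabeled["instance"])
    print(probe_url(relabeled))
```

Opening the printed probe URL in a browser or with curl is a quick way to check a single target by hand; the probe_success metric in the response shows whether the check passed.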
