YAML Basics:-

• YAML stands for "YAML Ain't Markup Language"

• YAML is a data serialization format used to store structured information, most often configuration

• We can use YAML to define key-value pairs (like variables), lists and objects

• YAML is very similar to JSON (JavaScript Object Notation)

• YAML primarily focuses on readability and user-friendliness: it is designed to be clean and easy to read

• We can define YAML files with either of two extensions: abc.yml or abc.yaml

1. YAML Comments
2. YAML Key Value Pairs
3. YAML Dictionary or Map
4. YAML Array / Lists
5. YAML Spaces
6. YAML Document Separator

Step-01: Comments & Key Value Pairs

Basic unit: key: value

A space after the colon is mandatory to differentiate the key from the value.

# Defining simple key-value pairs
name: motu
age: 23
city: noida

Use # to add comments.

# This is a comment
name: John # Inline comment
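To make the two rules above concrete, here is a small sketch of what happens when the space after the colon, or the space before the #, is left out. The keys age and tag are illustrative only, and the comments describe how the YAML specification treats plain values.

```yaml
# Correct: a space follows the colon, so this is the key "name" with the value "motu".
name: motu

# Without the space, the line is not read as a key-value pair: "city:noida" is
# treated as one plain string (or rejected, depending on where it appears).
# city:noida

# A # starts a comment only at the start of a line or after whitespace.
age: 23 # this is a comment
tag: v1#beta   # the value is the string "v1#beta"; the first # is part of it
```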

Step-02: Dictionary / Map

A dictionary (map) is a set of properties grouped together under a key.

All the items under a dictionary must be indented by the same number of spaces.

person: # Dictionary
  name: motu
  age: 23
  city: noida
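Since YAML is close to JSON, the same dictionary can also be written on one line with curly braces (flow style). The sketch below shows the three spellings side by side. The keys person_inline and person_json are hypothetical names, used only so that the three variants can sit in one file without repeating a key.

```yaml
# Block style: one property per line, all indented by the same amount
person:
  name: motu
  age: 23
  city: noida

# Flow style: the same data on a single line
person_inline: {name: motu, age: 23, city: noida}

# JSON syntax is accepted as well, since YAML 1.2 is designed as a superset of JSON
person_json: {"name": "motu", "age": 23, "city": "noida"}
```

Block style is usually preferred in configuration files because it is easier to read and to compare between versions.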

Step-03: Array / Lists

A dash (-) denotes each element of a list (array).

person: # Dictionary
  name: motu
  age: 23
  city: Hyderabad
  hobbies: # List
    - cycling
    - cooking

The same list can also be written in inline style. Use one notation or the other, because a key may appear only once in a dictionary:

  hobbies: [cycling, cooking] # List with a different notation

Step-04: Multiple Lists

A list item can itself be a dictionary. Each dash starts a new element, and the keys aligned under it belong to that element.

person: # Dictionary
  name: motu
  age: 23
  city: Hyderabad
  hobbies: # List
    - cycling
    - cooking
  # hobbies: [cycling, cooking] # The same list in inline notation
  friends: # List of dictionaries
    - name: friend1
      age: 22
    - name: friend2
      age: 25
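The position of each dash and the alignment of the keys under it decide which element a key belongs to. The following sketch annotates that rule and nests one more list inside an element. The keys team, members and skills are hypothetical and are not part of the example above.

```yaml
team:                 # hypothetical top-level dictionary
  members:            # list of dictionaries
    - name: friend1   # the dash starts the first element
      age: 22         # aligned with "name", so part of the same element
      skills:         # a list nested inside the first element
        - yaml
        - kubernetes
    - name: friend2   # a new dash starts the second element
      age: 25
```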

Step-05: YAML Spaces (Indentation)

- YAML is space-sensitive (use spaces, not tabs).
- Indentation indicates nesting.
- Standard: 2 spaces per level (not enforced, but common).

person:
  name: John
  address:
    city: Mumbai
    pin: 400001
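Because indentation carries the structure, moving a key by two spaces changes what the data means without causing any error. A minimal sketch, using the hypothetical keys person_a and person_b so that both variants fit in one file:

```yaml
# Variant A: "city" is indented under "address", so it belongs to the address.
person_a:
  name: John
  address:
    city: Mumbai

# Variant B: "city" is aligned with "address", so it is a sibling key of
# "name" and "address", and "address" is left with an empty (null) value.
person_b:
  name: John
  address:
  city: Mumbai
```

Both variants are valid YAML, which is why indentation mistakes often surface only later, when a tool reads the file and finds a field missing.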

Step-06: YAML Document Separator

- Use --- to separate multiple documents in one file.
- ... (optional) indicates the end of a document.

---
name: Alice
age: 25
---
name: Bob
age: 28
...

Step-07: Kubernetes YAML Top-level Objects

# Types of Kubernetes Objects

# Pod, ReplicaSet, Deployment, Service and many more

Pod API Objects Reference: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.26/#pod-v1-core

apiVersion:

kind:

metadata:

spec:

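# apiVersion: API version of the k8s object (for example, v1 for a Pod)
# kind: type of k8s object (Pod, ReplicaSet, Deployment, Service, ...)
# metadata: name and labels for the k8s object
# spec: specification, the real definition (desired state) of the k8s object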

Step-08: Sample Pod Template for Reference

apiVersion: v1 # String
kind: Pod # String
metadata: # Dictionary
  name: myapp-pod
  labels: # Dictionary
    app: myapp
spec:
  containers: # List
    - name: myapp
      image: stacksimplify/kubenginx:1.0.0
      ports: # List
        - containerPort: 80
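The same four top-level fields appear in every Kubernetes object, only with different values. As an illustration, here is a sketch of a Deployment that wraps the Pod definition above. The name myapp-deployment and the replica count are assumptions made for this example and do not come from the original notes.

```yaml
apiVersion: apps/v1        # Deployments belong to the apps/v1 API group
kind: Deployment
metadata:
  name: myapp-deployment   # hypothetical name
  labels:
    app: myapp
spec:
  replicas: 2              # illustrative value
  selector:
    matchLabels:
      app: myapp           # must match the labels in the Pod template below
  template:                # Pod template: same metadata/spec shape as the Pod above
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: stacksimplify/kubenginx:1.0.0
          ports:
            - containerPort: 80
```

Note how the YAML building blocks from the earlier steps recur: metadata and labels are dictionaries, containers and ports are lists, and the nesting is expressed purely through indentation.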

Dry run (checks the manifest on the client without creating anything):

# kubectl apply -f yourfile.yaml --dry-run=client

Look up the documented fields of a resource:

# kubectl explain deployment

# kubectl explain deployment.spec

Create the Pod from the definition file:

# kubectl apply -f 01-pod-definition.yml

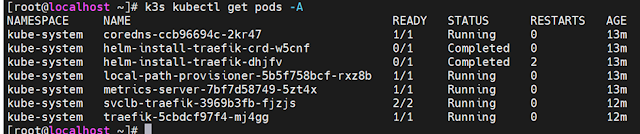

# kubectl get pods