What is GlusterFS?

GlusterFS (Gluster File System) is an open source distributed file system that can scale out in building-block fashion to store multiple petabytes of data. ... Scale-out storage systems based on GlusterFS are suitable for unstructured data such as documents, images, audio and video files, and log files.

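GlusterFS uses the following ports :-

1) rpcbind :- 111 (TCP and UDP)

2) glusterd :- 24007

3) Bricks (the ports clients connect to) :- 24009 - 24108 in GlusterFS releases before 3.4; from 3.4 onwards (including the 7.2 release used below), each brick listens on its own port starting at 49152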
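If the nodes run a host firewall, these ports have to be open between the servers and the client. The sketch below assumes Ubuntu's `ufw` (not part of the original setup) and only prints the rules so they can be reviewed first. The helper name `gluster_fw_rules` and its default brick range of 49152:49251 (room for 100 bricks) are illustrative choices, not GlusterFS defaults.

```shell
#!/usr/bin/env bash
# Print ufw rules for the GlusterFS ports (dry run: nothing is applied).
# Assumption: ufw is the firewall in use; pass the brick port range as "FIRST:LAST".
gluster_fw_rules() {
  local brick_range="${1:-49152:49251}"   # hypothetical default, sized for 100 bricks
  echo "ufw allow 111/tcp"                # rpcbind
  echo "ufw allow 111/udp"                # rpcbind also listens on UDP
  echo "ufw allow 24007/tcp"              # glusterd management daemon
  echo "ufw allow ${brick_range}/tcp"     # one port per brick process
}
```

Review the output of `gluster_fw_rules 24009:24108` (older releases) or plain `gluster_fw_rules` (newer ones), then pipe it to `sh` as root to apply it.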

Different Types of Volumes :-

1) Replication volume.

2) Distributed Volume.

3) Striped Volume (deprecated, and removed in GlusterFS 6, so it is not available in the 7.2 release used below).

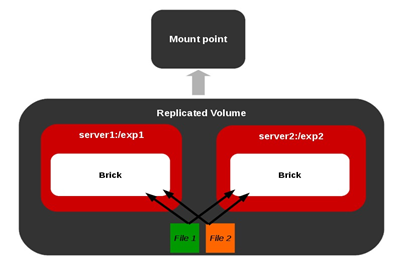

1) Replication volume.

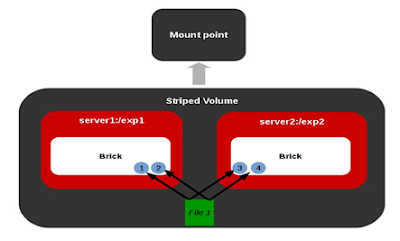

3) Striped Volume.

Lab setup :-

Node1 :- 172.31.39.204 (node1.example.com)

Node2 :- 172.31.38.176 (node2.example.com)

Client :- 172.31.13.233 (client.example.com)

Add these entries to /etc/hosts on all three machines :-

172.31.39.204 node1.example.com node1

172.31.38.176 node2.example.com node2

172.31.13.233 client.example.com client
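Making the same edit on three machines is easy to get wrong, so a small idempotent helper can add each line only if the hostname is not already listed. A minimal sketch; `add_host` is a hypothetical helper, and taking the file as an argument lets you try it on a copy before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP FQDN SHORT" to a hosts file unless FQDN is already listed.
# Usage: add_host FILE IP FQDN SHORTNAME   (hypothetical helper)
add_host() {
  local file="$1" ip="$2" fqdn="$3" short="$4"
  # -F: treat the dots literally; -w: match the name as a whole word
  if ! grep -qwF "$fqdn" "$file"; then
    printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
  fi
}
```

On each machine, as root: `add_host /etc/hosts 172.31.39.204 node1.example.com node1`, and likewise for node2 and client. Running it twice leaves the file unchanged.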

On node1 and node2, install and start the GlusterFS server :-

# apt -y install glusterfs-server

# systemctl enable --now glusterd

# systemctl status glusterd

# gluster --version

glusterfs 7.2

On the client, install the GlusterFS client, plus nfs-common for NFS mounts :-

# apt-get -y install glusterfs-client

# apt-get -y install nfs-common

# systemctl status rpcbind

The GlusterFS logs are kept under /var/log/glusterfs/ on each server :-

# cd /var/log/glusterfs/

# ls

bricks

cli.log

cmd_history.log

geo-replication

geo-replication-slaves

gfproxy

glusterd.log

glustershd.log

quota-mount-volume1.log

quota_crawl

quotad.log

snaps

From node1, add node2 to the trusted storage pool :-

# gluster peer probe node2

On both node1 and node2, create the brick directory :-

# mkdir -p /gluster_data

On node1, create the replicated volume :-

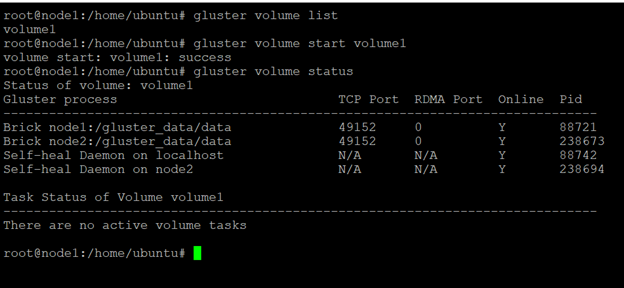

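# gluster volume create volume1 replica 2 node1:/gluster_data/data node2:/gluster_data/data force

The trailing force is needed here because the brick directories live on the root partition, which gluster otherwise refuses to use for bricks.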
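The brick arguments follow a fixed host:path pattern, so with more nodes they can be generated instead of typed. A sketch with a hypothetical helper `bricks_for`; keep in mind that for a replicated volume the number of bricks must be a multiple of the replica count.

```shell
#!/usr/bin/env bash
# Build space-separated "host:path" brick arguments for `gluster volume create`.
# Usage: bricks_for PATH HOST...   (hypothetical helper)
bricks_for() {
  local path="$1" out="" host
  shift
  for host in "$@"; do
    # Add a separating space only once "out" is non-empty
    out="${out:+$out }${host}:${path}"
  done
  echo "$out"
}
```

`gluster volume create volume1 replica 2 $(bricks_for /gluster_data/data node1 node2) force` expands to the command above. The substitution is left unquoted on purpose so that each brick becomes a separate argument.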

# gluster volume list

volume1

# gluster volume info

# gluster volume start volume1

# gluster volume status

# gluster volume info

Volume Name: volume1

Type: Replicate

Volume ID: 8feae0a4-0061-4e01-8f8a-90679a34b5cc

Status: Started
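When scripting these steps (for example, waiting until a volume is started before mounting it), it helps to pull a single field out of `gluster volume info`. A sketch that assumes the `Field: value` layout shown above; `volume_field` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `gluster volume info` text on stdin and print the value of one field.
# Usage: volume_field FIELD   (hypothetical helper)
volume_field() {
  # Match "FIELD: " at the start of a line and print everything after it,
  # so values that themselves contain colons are kept intact.
  awk -v f="$1" 'index($0, f ": ") == 1 { print substr($0, length(f) + 3); exit }'
}
```

For example: `[ "$(gluster volume info volume1 | volume_field Status)" = Started ] && echo ready`.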

3. Client node :-

# mkdir -p /mnt/volume1

# mount -t glusterfs node1:volume1 /mnt/volume1

# cd /mnt/volume1/

# touch jk file test

OR

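# mount -t nfs -o mountvers=3 node1:/volume1 /mnt/volume1

(The NFS mount only works if the volume is exported over NFS; Gluster's built-in NFS server is disabled by default in recent releases.)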
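The mounts above do not survive a reboot. One common approach is an /etc/fstab entry; the sketch below only prints the line. `_netdev` tells the system to wait for the network, and the optional `backup-volfile-servers` mount option lets the client fetch the volume layout from node2 if node1 is down at mount time. The helper name `fstab_line` is hypothetical.

```shell
#!/usr/bin/env bash
# Print an /etc/fstab line that mounts a Gluster volume with the FUSE client at boot.
# Usage: fstab_line SERVER VOLUME MOUNTPOINT [BACKUP_SERVER]   (hypothetical helper)
fstab_line() {
  local server="$1" volume="$2" mountpoint="$3" backup="$4"
  local opts="defaults,_netdev"            # _netdev: mount only after networking is up
  if [ -n "$backup" ]; then
    opts="${opts},backup-volfile-servers=${backup}"
  fi
  printf '%s:/%s %s glusterfs %s 0 0\n' "$server" "$volume" "$mountpoint" "$opts"
}
```

As root on the client: `fstab_line node1 volume1 /mnt/volume1 node2 >> /etc/fstab`, then `mount -a` to test the entry.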

4. Check the brick path on both nodes :-

# ls /gluster_data/data/

file jk sk test
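Because volume1 is replicated, both bricks should list the same names. A sketch of a comparison helper (the name `same_names` is hypothetical) that takes two listing files and prints any difference. Gathering node2's listing over `ssh` is an assumption about your setup, not part of the original steps.

```shell
#!/usr/bin/env bash
# Compare two listings (one name per line), ignoring order.
# Prints the differences and returns 1 if they differ; returns 0 if they match.
same_names() {
  local tmp rc
  tmp="$(mktemp)"
  sort "$1" > "$tmp"
  if sort "$2" | diff "$tmp" -; then rc=0; else rc=1; fi
  rm -f "$tmp"
  return "$rc"
}
```

In bash, on node1: `same_names <(ls /gluster_data/data) <(ssh node2 ls /gluster_data/data) && echo in sync`. Plain `ls` skips the hidden .glusterfs directory, so only user files are compared.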

5. How to delete a volume :-

# umount /mnt/volume1/

# gluster volume stop volume1

# gluster volume delete volume1
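Deleting a volume leaves the brick directories and their files in place. The old brick directory also keeps Gluster's extended attributes and its hidden .glusterfs directory, so gluster typically refuses to reuse it, reporting that the brick is already part of a volume. A widely used cleanup clears that metadata; the sketch below only prints the commands (a dry run) for review, and the helper name `brick_cleanup_cmds` is hypothetical. `setfattr` comes from the `attr` package and must run as root.

```shell
#!/usr/bin/env bash
# Print the commands that clear Gluster metadata from an old brick directory
# so it can be reused in a new volume (dry run: review, then run as root).
brick_cleanup_cmds() {
  local dir="${1%/}"   # strip one trailing slash
  echo "setfattr -x trusted.glusterfs.volume-id ${dir}"
  echo "setfattr -x trusted.gfid ${dir}"
  echo "rm -rf ${dir}/.glusterfs"
}
```

Run `brick_cleanup_cmds /gluster_data/data` on each node and check the output before executing it. This removes only Gluster metadata; user files left in the directory remain.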

Helpful commands for gluster :-

# gluster --help

peer help - display help for peer commands

volume help - display help for volume commands

volume bitrot help - display help for volume bitrot commands

volume quota help - display help for volume quota commands

snapshot help - display help for snapshot commands

global help - list global commands

# gluster peer help

gluster peer commands

======================

peer detach { <HOSTNAME> | <IP-address> } [force] - detach peer specified by <HOSTNAME>

peer help - display help for peer commands

peer probe { <HOSTNAME> | <IP-address> } - probe peer specified by <HOSTNAME>

peer status - list status of peers

pool list - list all the nodes in the pool (including localhost)

Usage: gluster [options] <help> <peer> <pool> <volume>

# gluster pool list

UUID Hostname State

8b9116a8-3d6a-4ac3-afba-fb79c3beb2ff node2 Connected

39c6d1c1-3c01-498b-a536-731e9c7e78d4 localhost Connected
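Before creating volumes it is worth confirming that every peer is connected. A sketch that reads `gluster pool list` output on stdin, assuming a single header line and State as the last column, as shown above; `all_connected` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `gluster pool list` output on stdin; succeed only if every node is Connected.
# Prints the hostname of each node whose State is anything else.
all_connected() {
  awk 'NR > 1 && NF > 0 && $NF != "Connected" { bad = 1; print "not connected: " $2 }
       END { exit bad }'
}
```

For example: `gluster pool list | all_connected && echo 'all peers connected'`.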

How to use quotas in GlusterFS volumes :-

# cd /mnt/volume1

# df -hP .

Filesystem Size Used Avail Use% Mounted on

node1:volume1 7.7G 3.7G 4.1G 48% /mnt/volume1

1. Check quota :-

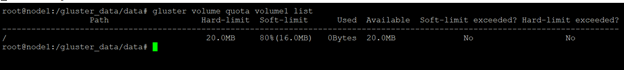

# gluster volume list

# gluster volume quota volume1 list

2. Enable quota :-

# gluster volume quota volume1 enable

volume quota : success

3. Set a quota for the whole volume :-

# gluster volume quota volume1 limit-usage / 20MB

4. List the quotas :-

# gluster volume quota volume1 list

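Directory limits can only be set on paths that already exist in the volume (given relative to the volume root), so create the directories through the client mount first :-

# mkdir /mnt/volume1/data1 /mnt/volume1/data2

# gluster volume quota volume1 limit-usage /data1 10MB

# gluster volume quota volume1 limit-usage /data2 10MB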

# df -hP .

Filesystem Size Used Avail Use% Mounted on

node1:volume1 20M 0 20M 0% /mnt/volume1
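With quota enabled, df on the client reports the quota limit as the size, as the 20M above shows. To check that from a script, a sketch that extracts the Size column for a given mount point from `df -hP` output (the -P flag keeps each filesystem on one line); `df_size` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the Size column that `df -hP` reports for one mount point (df output on stdin).
# Usage: df_size MOUNTPOINT   (hypothetical helper)
df_size() {
  # Skip the header; the mount point is the last field of each line
  awk -v m="$1" 'NR > 1 && $NF == m { print $2 }'
}
```

For example: `df -hP /mnt/volume1 | df_size /mnt/volume1`.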

# df -hP .

# cd /mnt/volume1/data1

# dd if=/dev/urandom of=myfile1 bs=10M count=1

# du -sh myfile1

10M myfile1

# df -hP .

To switch quota off again :-

# gluster volume quota volume1 disable

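Adding and removing bricks :-

(A brick can only be added if it is not already part of the volume. On a replicated volume such as volume1, bricks must be added or removed in multiples of the replica count, unless the replica count itself is being changed.)

# gluster volume add-brick volume1 node2:/gluster_data/data force

# gluster volume remove-brick volume1 node2:/gluster_data/data commit

After adding bricks, rebalance so that existing files are spread onto the new bricks :-

# gluster volume rebalance volume1 start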
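A rebalance runs in the background, and its progress is shown by `gluster volume rebalance volume1 status`. A generic polling sketch (the helper `wait_for` is hypothetical) re-runs a command until its output contains a given word. It assumes the status table prints "completed" when done; check the wording on your version, and note that a match on any line counts, so on multi-node volumes inspect the full table afterwards.

```shell
#!/usr/bin/env bash
# Re-run a command until its output contains PATTERN, or give up after TRIES attempts.
# Usage: wait_for PATTERN TRIES DELAY_SECONDS CMD [ARGS...]   (hypothetical helper)
wait_for() {
  local pattern="$1" tries="$2" delay="$3" i=1
  shift 3
  while [ "$i" -le "$tries" ]; do
    if "$@" 2>/dev/null | grep -q -- "$pattern"; then
      return 0                 # pattern seen
    fi
    sleep "$delay"             # wait before the next attempt
    i=$((i + 1))
  done
  return 1                     # gave up
}
```

For example, `wait_for completed 60 10 gluster volume rebalance volume1 status` checks up to 60 times, 10 seconds apart.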

4. Restricting client connections to GlusterFS volumes :-

# gluster volume start volume1

# gluster volume info

# gluster volume get volume1 auth.allow

OR

# gluster volume get volume1 all | less

To block an IP address, hostname, domain or IP range (wildcards such as 172.31.* are accepted) :-

# gluster volume set volume1 auth.reject client.example.com

# gluster volume get volume1 auth.reject
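auth.allow and auth.reject take several entries as a comma-separated list. A tiny sketch (the helper `join_csv` is hypothetical) that builds such a list, for example to allow only the two nodes and the client subnet instead of rejecting a single host.

```shell
#!/usr/bin/env bash
# Join the arguments with commas, the list form auth.allow and auth.reject accept.
# Usage: join_csv ENTRY...   (hypothetical helper)
join_csv() {
  local IFS=,        # "$*" joins arguments with the first character of IFS
  echo "$*"
}
```

For example: `gluster volume set volume1 auth.allow "$(join_csv 172.31.39.204 172.31.38.176 '172.31.13.*')"`. Quote wildcard entries so the shell does not expand them.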

On the client, check whether the Gluster volume is still mounted :-

root@client:~# mount | grep "gluster"

Then, on node1, remove the restriction :-

# gluster volume reset volume1 auth.reject

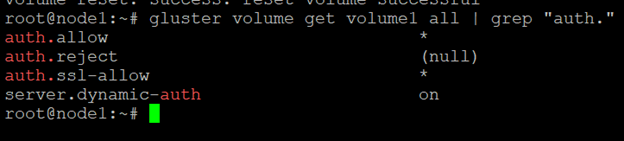

# gluster volume get volume1 all | grep "auth."
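To read one option back in a script, a sketch that takes `gluster volume get volume1 all` output on stdin and prints the value column for a given key. It assumes the two-column `Option  Value` layout; `option_value` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the value of one option from `gluster volume get VOL all` output on stdin.
# Usage: option_value OPTION_NAME   (hypothetical helper)
option_value() {
  # First column is the option name, second column is its value
  awk -v k="$1" '$1 == k { print $2; exit }'
}
```

For example: `gluster volume get volume1 all | option_value auth.reject`.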
