i). Checking Cluster Health

1.Check Cluster Status

# kubectl cluster-info

2.Check Node Health

# kubectl get nodes

# kubectl describe node <node-name>

3.Check Pods in the kube-system Namespace

# kubectl get pods -n kube-system

# kubectl describe pod <pod-name> -n kube-system

4.Debug a Failing Pod.

# kubectl logs <pod-name> -n kube-system

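5.Check Component Status.

# kubectl get componentstatuses

(ComponentStatus has been deprecated since Kubernetes v1.19; the API server health endpoints /readyz and /livez are the recommended checks.)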

6.Check API Server Health.

# kubectl get --raw /readyz

(It should return ok if the API server is healthy.)

7.Check API Server Logs.

# kubectl logs -n kube-system -l component=kube-apiserver

8.Check Kubelet Logs on the Node.

# journalctl -u kubelet -f

ii). Troubleshooting Pod Issues

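1.View logs of a Pod.

Common container exit codes (shown as "Exit Code" under "Last State" in kubectl describe pod):

Exit Code 1: Application error.

Exit Code 126: Command found but could not be executed (e.g. permission denied).

Exit Code 127: Command not found.

Exit Code 137: Killed by SIGKILL (128 + 9), most often because the container was OOMKilled (out of memory).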
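A small shell helper can turn an exit code into a likely cause quickly. It encodes the list above plus the general convention that codes above 128 mean "terminated by signal (code minus 128)". This is a sketch: the function name explain_exit_code is hypothetical, and its messages are only hints. Always confirm the Reason field in kubectl describe pod.

```shell
# explain_exit_code: translate a container exit code into a likely cause.
# Codes above 128 conventionally mean "terminated by signal (code - 128)".
explain_exit_code() {
  code=$1
  case "$code" in
    0)   echo "success" ;;
    1)   echo "application error" ;;
    126) echo "command cannot be executed (e.g. permission denied)" ;;
    127) echo "command not found" ;;
    137) echo "killed by SIGKILL (128+9), often OOMKilled" ;;
    143) echo "terminated by SIGTERM (128+15)" ;;
    *)
      # Non-numeric input makes the test fail silently and falls through.
      if [ "$code" -gt 128 ] 2>/dev/null && [ "$code" -le 255 ]; then
        echo "killed by signal $((code - 128))"
      else
        echo "unknown exit code $code"
      fi
      ;;
  esac
}
```

Usage (the jsonpath reads the last terminated state of the Pod's first container):

# explain_exit_code "$(kubectl get pod <pod-name> -n <namespace> -o jsonpath='{.status.containerStatuses[0].lastState.terminated.exitCode}')"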

Start a test Pod to practice with:

# kubectl run nginx --image=nginx --port=80

Then view the logs (use nginx as <pod-name> for the test Pod):

# kubectl logs <pod-name>

# kubectl logs <pod-name> -n <namespace>

# kubectl logs <pod-name> -n <namespace> -c <container-name>

# kubectl logs <pod-name> -n <namespace> -c <container-name> --previous   # logs of the previous, crashed instance

# kubectl logs --since=1h <pod-name>

# kubectl logs --tail=20 <pod-name>

# kubectl logs <pod-name> > pod.log

2.Get detailed information about a Pod.

# kubectl describe pod my-pod -n my-namespace

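3.List Pods and their statuses.

Running: The Pod is bound to a node and its containers are running (check the READY column to confirm they are healthy).

Pending: The Pod has been accepted but is still waiting to be scheduled or for its images to be pulled.

CrashLoopBackOff: A container keeps crashing, and Kubernetes waits progressively longer before restarting it.

ImagePullBackOff / ErrImagePull: The image cannot be pulled (wrong name or tag, missing registry credentials, or no network access).

OOMKilled: The container exceeded its memory limit and was killed.

Completed: All containers exited successfully (typical for Pods created by Jobs).

Terminating: The Pod is shutting down (it might be stuck; see step 11).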

# kubectl get pods -n <namespace>

# kubectl get pods -A

# kubectl get pods --all-namespaces   # long form of -A
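The statuses above can also be filtered automatically. This sketch assumes the default column layout of kubectl get pods -A --no-headers (NAMESPACE NAME READY STATUS RESTARTS AGE). unhealthy_pods is a hypothetical helper name. It flags every Pod that is not Completed and is not Running with all of its containers ready.

```shell
# unhealthy_pods: read `kubectl get pods -A --no-headers` on stdin and print
# Pods that are neither Completed nor Running with all containers ready.
# Columns assumed: NAMESPACE NAME READY STATUS RESTARTS AGE
unhealthy_pods() {
  awk '{
    split($3, ready, "/")                # READY looks like "ready/total"
    if ($4 == "Completed") next          # finished Job Pods are fine
    if ($4 == "Running" && ready[1] == ready[2]) next
    print $1 "/" $2 " " $4 " " $3        # namespace/name status ready
  }'
}
```

Usage:

# kubectl get pods -A --no-headers | unhealthy_pods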

4.Open a shell inside a running container.

# kubectl exec -it <pod-name> -- /bin/bash

# kubectl exec -it <pod-name> -n <namespace> -- /bin/bash

If the image has no bash (common with Alpine- or BusyBox-based images), use sh instead:

# kubectl exec -it <pod-name> -n <namespace> -- /bin/sh

5.Forward a local port to a Pod.

# kubectl port-forward my-app-pod -n my-namespace 8080:8080

6.Debug with an ephemeral (temporary) container.

# kubectl debug -it my-pod --image=busybox --target=my-container

(--target lets the debug container see the processes of my-container.)

7.Check Events for Errors.

# kubectl get events --sort-by=.metadata.creationTimestamp

# kubectl get events --sort-by=.metadata.creationTimestamp -n <namespace>

# kubectl get events -w

# kubectl get events

# kubectl get events -n kube-system

8.Check CPU and Memory Usage of Pods (requires the Metrics API, e.g. Metrics Server).

# kubectl top pods -n <namespace>

9.Check DNS Resolution Issues.

# kubectl exec -it <pod-name> -- nslookup google.com

# kubectl exec -it <pod-name> -- cat /etc/resolv.conf

# kubectl get pods -n kube-system -l k8s-app=kube-dns

# kubectl logs -n kube-system -l k8s-app=kube-dns
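When a lookup fails, it can help to compare the Pod's /etc/resolv.conf with what the cluster DNS normally injects. This sketch (check_resolv_conf is a hypothetical name) assumes the default cluster domain cluster.local. It only checks for a nameserver line and an svc.<domain> search entry, and it does not test whether the nameserver actually answers.

```shell
# check_resolv_conf: read a Pod's /etc/resolv.conf on stdin and report whether
# it has a nameserver and the "svc.<domain>" search entry added by Kubernetes.
# Assumes the default cluster domain "cluster.local" unless $1 overrides it.
check_resolv_conf() {
  domain=${1:-cluster.local}
  awk -v d="svc.$domain" '
    $1 == "nameserver" { ns = $2 }
    $1 == "search" { for (i = 2; i <= NF; i++) if ($i == d) svc = 1 }
    END {
      if (ns == "") { print "missing nameserver"; exit 1 }
      if (!svc)     { print "missing search domain " d; exit 1 }
      print "ok (nameserver " ns ")"
    }'
}
```

Usage:

# kubectl exec <pod-name> -- cat /etc/resolv.conf | check_resolv_conf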

10.Restart CoreDNS if needed.

# kubectl rollout restart deployment coredns -n kube-system

11.Pod Stuck in Terminating.

# kubectl delete pod <pod-name> --grace-period=0 --force -n <namespace>

If the Pod still does not go away, remove its finalizers:

# kubectl patch pod <pod-name> -n <namespace> -p '{"metadata":{"finalizers":null}}'
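Force deletion does not wait for the kubelet to confirm that the Pod has been terminated, so it is worth reviewing each target first. The sketch below assumes the kubectl get pods -A --no-headers column layout. terminating_pod_cmds is a hypothetical helper that only prints the commands instead of running them.

```shell
# terminating_pod_cmds: read `kubectl get pods -A --no-headers` on stdin and
# print (not run) a force-delete command for every Pod stuck in Terminating,
# so each one can be reviewed before it is executed.
terminating_pod_cmds() {
  awk '$4 == "Terminating" {
    printf "kubectl delete pod %s -n %s --grace-period=0 --force\n", $2, $1
  }'
}
```

Usage:

# kubectl get pods -A --no-headers | terminating_pod_cmds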

12.Generating Kubernetes YAML Files Using Commands.

For Deployment.

# kubectl create deployment nginx-deployment --image=nginx --replicas=2 --dry-run=client -o yaml > nginx-deployment.yaml

For Pod.

# kubectl run my-pod --image=nginx --restart=Never --dry-run=client -o yaml > my-pod.yaml

For Service (NodePort). kubectl expose reads the selector from an existing Deployment, so my-app must already exist:

# kubectl expose deployment my-app --port=80 --type=NodePort --dry-run=client -o yaml > my-service.yaml

For ConfigMap.

# kubectl create configmap my-config --from-literal=key1=value1 --dry-run=client -o yaml > my-config.yaml

For Secret. The values in the generated file are base64-encoded, not encrypted.

# kubectl create secret generic my-secret --from-literal=username=admin --from-literal=password=1234 --dry-run=client -o yaml > my-secret.yaml

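13.Understanding --dry-run=client

With --dry-run=client, kubectl only processes the object locally and prints what it would send. Nothing is submitted to the API server, so server-side validation and admission checks do not run. Use --dry-run=server for a full check that still persists nothing.

Check if a YAML File is Correct Before Creation

# kubectl create -f first_pod.yaml --dry-run=client

# kubectl create -f first_pod.yaml --dry-run=server

Check if Applying a YAML Will Work

# kubectl apply -f first_pod.yaml --dry-run=client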

14.Check Pod IP Addresses (and the node each Pod runs on).

# kubectl get pods -o wide

15.List All Pods with Labels.

# kubectl get pods --show-labels

# kubectl get pod <pod-name> --show-labels

iii). Troubleshooting Node Issues

1.Check Node Status.

Running kubectl get nodes lists every node in the cluster along with its current status. The status is usually one of the following:

- Ready: The node is healthy and ready to accept and run Pods. This is the ideal state.

- NotReady: The node is not functioning correctly and cannot run Pods, for example because of resource pressure, network problems, or a stopped kubelet or container runtime.

- SchedulingDisabled: The node has been marked unschedulable (for example with kubectl cordon), so no new Pods are scheduled on it. This is useful for maintenance or troubleshooting. It is shown together with the readiness state, e.g. Ready,SchedulingDisabled.

- Unknown: The control plane has not heard from the node's kubelet recently, usually because of communication problems between the node and the control plane.

# kubectl get nodes
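On clusters with many nodes, the status column can be filtered in the shell. This sketch assumes the default kubectl get nodes --no-headers layout (NAME STATUS ROLES AGE VERSION), and not_ready_nodes is a hypothetical name. It also reports cordoned nodes, because their status reads Ready,SchedulingDisabled.

```shell
# not_ready_nodes: read `kubectl get nodes --no-headers` on stdin and print
# every node whose STATUS is not exactly "Ready" (NotReady, Unknown, and
# cordoned nodes shown as "Ready,SchedulingDisabled").
# Columns assumed: NAME STATUS ROLES AGE VERSION
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 " " $2 }'
}
```

Usage:

# kubectl get nodes --no-headers | not_ready_nodes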

2.Check Resource Usage (requires the Metrics API, e.g. Metrics Server).

# kubectl top nodes

# kubectl top pods -n my-namespace
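A similar filter can flag nodes under heavy load. The sketch assumes the kubectl top nodes --no-headers layout NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%. The default threshold of 80 percent is an arbitrary choice, and busy_nodes is a hypothetical helper name.

```shell
# busy_nodes: read `kubectl top nodes --no-headers` on stdin and print nodes
# whose CPU% or MEMORY% is at or above a threshold (default 80).
# Columns assumed: NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
busy_nodes() {
  limit=${1:-80}
  awk -v limit="$limit" '{
    cpu = $3; mem = $5
    sub(/%$/, "", cpu); sub(/%$/, "", mem)   # "85%" -> "85"
    if (cpu + 0 >= limit + 0 || mem + 0 >= limit + 0)
      print $1 " cpu=" $3 " mem=" $5
  }'
}
```

Usage:

# kubectl top nodes --no-headers | busy_nodes 90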

3.Get Detailed Node Information.

# kubectl describe node <node-name>

4.Check Kubelet Logs

# journalctl -u kubelet -f --no-pager

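5.Check, and if needed restart, the Kubelet and Container Runtime.

# systemctl status kubelet

# systemctl status containerd

# systemctl status docker   # only if the node uses Docker through cri-dockerd

# systemctl restart containerd

# systemctl restart kubelet

(Since Kubernetes v1.24 the kubelet no longer talks to Docker directly; Docker Engine can only be used through cri-dockerd.)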

6.Verify Network Connectivity from the Node.

# ping <API-SERVER-IP>

# curl -k https://<API-SERVER-IP>:6443/healthz

7.List All Nodes with Their Labels.

# kubectl get nodes --show-labels

# kubectl get node <node-name> --show-labels

# kubectl get nodes -l env=prod

8.Remove a Failed Node.

# kubectl drain <node-name> --ignore-daemonsets --delete-emptydir-data

# kubectl delete node <node-name>

iv). Troubleshooting Networking Issues

1.Check Pod Networking

# kubectl get pods -o wide

# kubectl get nodes -o wide

# kubectl get pods -o wide -n <namespace>

2.Check the network plugin (CNI) and restart the kubelet if needed

# systemctl restart kubelet

# kubectl get pods -n kube-system

# kubectl get pods -n kube-system | grep cni

# kubectl get pods -n kube-system -l k8s-app=calico-node

# kubectl get pods -n kube-system -l k8s-app=flannel

# kubectl get pods -n kube-system -l k8s-app=cilium

# kubectl logs -n kube-system -l k8s-app=calico-node

# kubectl logs -n kube-system -l k8s-app=flannel

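# kubectl logs -n kube-system -l k8s-app=cilium

(Namespaces and labels vary by installation and version; for example, recent Flannel manifests deploy into the kube-flannel namespace, so adjust -n and -l accordingly.)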

# ls /etc/cni/net.d/

# cat /etc/cni/net.d/10-calico.conflist
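The file names in the CNI config directory are usually enough to show which plugin a node is configured for. The sketch below is a heuristic only: detect_cni is a hypothetical helper, and it simply matches "calico", "flannel" or "cilium" in the file names. Run it on the node itself.

```shell
# detect_cni: guess the installed CNI plugin from the config file names in a
# CNI config directory (default /etc/cni/net.d). Filename heuristic only:
# recognises Calico, Flannel and Cilium and prints "unknown" otherwise.
detect_cni() {
  dir=${1:-/etc/cni/net.d}
  found=""
  for f in "$dir"/*; do
    [ -e "$f" ] || continue              # empty directory: glob did not match
    case "$(basename "$f")" in
      *calico*)  found="$found calico" ;;
      *flannel*) found="$found flannel" ;;
      *cilium*)  found="$found cilium" ;;
    esac
  done
  found=${found# }                       # drop the leading space
  echo "${found:-unknown}"
}
```

Usage:

# detect_cni /etc/cni/net.d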

3.Restart CNI Pods (Calico example; the DaemonSet recreates the deleted Pods automatically)

# kubectl delete pod -n kube-system -l k8s-app=calico-node

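Common Networking Issues

i). Test Pod-to-Pod Communication.

# kubectl exec -it <pod-name> -n <namespace> -- ping <another-pod-ip>

To test a Service, use its DNS name. ClusterIPs are virtual and often do not answer ping, so use nslookup or an HTTP request instead:

# kubectl exec -it <pod-name> -n <namespace> -- nslookup <service-name>.<service-namespace>.svc.cluster.local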
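Service and Pod DNS names follow fixed patterns. A Service resolves as <service>.<namespace>.svc.<cluster-domain>. A Pod IP has an A record under <namespace>.pod.<cluster-domain>, with the dots of the IP replaced by dashes; CoreDNS serves this record only when its pods option is enabled, as in the default kubeadm configuration. The helpers below (hypothetical names svc_fqdn and pod_fqdn) build these names. They assume the default cluster domain cluster.local unless a third argument is given.

```shell
# svc_fqdn SERVICE NAMESPACE [DOMAIN]: DNS name of a Service.
svc_fqdn() {
  echo "$1.$2.svc.${3:-cluster.local}"
}

# pod_fqdn POD_IP NAMESPACE [DOMAIN]: DNS A record of a Pod IP,
# where the dots of the IP are replaced by dashes.
pod_fqdn() {
  echo "$(echo "$1" | tr . -).$2.pod.${3:-cluster.local}"
}
```

Usage:

# kubectl exec -it <pod-name> -n <namespace> -- nslookup "$(svc_fqdn my-service <namespace>)"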

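On the node, list the iptables rules. In iptables mode, kube-proxy programs its Service rules in the nat table:

# iptables -L -v -n

# iptables -t nat -L KUBE-SERVICES -n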

# kubectl logs -n kube-system -l k8s-app=kube-dns

Restart CoreDNS:

# kubectl rollout restart deployment coredns -n kube-system

# kubectl get pods -n kube-system -l k8s-app=kube-proxy

# kubectl rollout restart ds kube-proxy -n kube-system

# kubectl logs -n kube-system -l k8s-app=kube-proxy

ii). Network Policies Blocking Traffic.

# kubectl get networkpolicy -n <namespace>

# kubectl describe networkpolicy <policy-name> -n <namespace>

# kubectl delete networkpolicy <policy-name> -n <namespace>

iii). Check Node-to-Node Networking.

# kubectl get nodes -o wide

# ping <node-ip>

# kubectl logs -n kube-system -l k8s-app=<cni-plugin>

iv). DNS Resolution Failures.

# kubectl get pods -n kube-system -l k8s-app=kube-dns

# kubectl get pods -n kube-system | grep coredns

# kubectl exec -it <pod-name> -- nslookup google.com

Restart CoreDNS

# kubectl delete pod -n kube-system -l k8s-app=kube-dns

# systemctl restart kubelet

v). External Traffic Not Reaching Services

# kubectl get svc -n <namespace>

| Service Type | How External Traffic Works |
|---|---|
| ClusterIP | Only accessible inside the cluster (the default type) |
| NodePort | Accessible on each node's external IP on a specific port (30000-32767 by default) |
| LoadBalancer | Uses a cloud provider's load balancer to expose the service |
| Ingress (a separate resource, not a Service type) | Routes external traffic to a service based on hostname/path |

# kubectl get endpoints -n <namespace>

# kubectl describe svc my-service -n <namespace>
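A Service whose selector matches no ready Pod has no endpoints, and this is one of the most common reasons traffic never arrives. This sketch (services_without_endpoints is a hypothetical name) assumes the kubectl get endpoints --no-headers layout NAME ENDPOINTS AGE, in which an empty Service shows <none>.

```shell
# services_without_endpoints: read `kubectl get endpoints --no-headers` on
# stdin and print Services whose ENDPOINTS column is "<none>", i.e. whose
# selector currently matches no ready Pod.
services_without_endpoints() {
  awk '$2 == "<none>" { print $1 }'
}
```

Usage:

# kubectl get endpoints -n <namespace> --no-headers | services_without_endpoints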

Restart kube-proxy:

# kubectl rollout restart ds kube-proxy -n kube-system

# kubectl get events -n <namespace>

# kubectl get ingress -n <namespace>

# kubectl logs -n <ingress-namespace> -l app.kubernetes.io/name=ingress-nginx   # e.g. ingress-nginx for a standard ingress-nginx install

# kubectl describe ingress my-ingress -n <namespace>

If traffic still fails, restart the CNI agent and the kubelet:

# kubectl rollout restart ds calico-node -n kube-system   # if using Calico

# systemctl restart kubelet   # on the affected node

v). Troubleshooting API Server Issues

# kubectl version

Client Version shows the version of your local kubectl client.

Server Version shows the version of the Kubernetes API server that kubectl is connected to.

kubectl is supported within one minor version (older or newer) of the API server, so keep the client within that range.
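kubectl is supported within one minor version (older or newer) of kube-apiserver, and this rule can be checked mechanically. The sketch compares only the minor numbers and assumes both versions have major version 1. minor_of and check_kubectl_skew are hypothetical helpers. Reading the versions with jq, as in the usage line, assumes jq is installed.

```shell
# minor_of: extract the minor number from a version such as "v1.30.2".
minor_of() {
  echo "$1" | sed -E 's/^v?[0-9]+\.([0-9]+).*/\1/'
}

# check_kubectl_skew CLIENT SERVER: succeed if the minor versions differ by
# at most one (assumes both versions are 1.x).
check_kubectl_skew() {
  client=$(minor_of "$1")
  server=$(minor_of "$2")
  skew=$((client - server))
  if [ "$skew" -lt 0 ]; then skew=$((-skew)); fi   # absolute difference
  if [ "$skew" -le 1 ]; then
    echo "supported skew ($1 vs $2)"
  else
    echo "unsupported skew ($1 vs $2)"
    return 1
  fi
}
```

Usage:

# check_kubectl_skew "$(kubectl version -o json | jq -r .clientVersion.gitVersion)" "$(kubectl version -o json | jq -r .serverVersion.gitVersion)"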

1.Check API Server Status

# kubectl get componentstatuses

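2.Check API Server Logs

# kubectl logs -n kube-system -l component=kube-apiserver

If the API server itself is down, kubectl cannot reach it. In that case, check the kubelet logs on the control plane node (they report static Pod failures) and the container logs:

# journalctl -u kubelet -f

# crictl logs <apiserver-container-id>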

3.Check API Server Status on Control Plane Nodes.

# crictl ps -a --name kube-apiserver   # -a also lists exited containers

4.Check API Server Health Endpoints.

# kubectl get --raw /readyz

# kubectl get --raw /livez

# kubectl get --raw /healthz   # deprecated since v1.16; prefer /livez and /readyz
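The verbose variants of these endpoints (for example /readyz?verbose) list each individual check as "[+]name ok" or "[-]name failed: ...". The sketch below (failed_health_checks is a hypothetical name) extracts the names of the failed checks from that text.

```shell
# failed_health_checks: read the output of a verbose health endpoint
# (/readyz?verbose or /livez?verbose) on stdin and print the names of the
# checks reported as failed ("[-]name failed: ...").
failed_health_checks() {
  sed -n 's/^\[-\]\([^ ]*\).*/\1/p'
}
```

Usage:

# kubectl get --raw='/readyz?verbose' | failed_health_checks

When the API server is unhealthy, kubectl may report an error instead of printing the plain text. Querying the endpoint directly, e.g. curl -ks "https://<API-SERVER-IP>:6443/readyz?verbose", returns the raw list, provided anonymous access to the health endpoints is allowed (it usually is by default).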

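5.Verify etcd is Healthy.

# ETCDCTL_API=3 etcdctl --endpoints=<etcd-endpoint> endpoint health

On kubeadm clusters etcd requires TLS client authentication, so also pass --cacert, --cert and --key (the certificates are stored under /etc/kubernetes/pki/etcd/ by default).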

6.Check Certificate Issues.

# kubeadm certs check-expiration

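7.Renew certificates if needed.

# kubeadm certs renew all

The control plane components must then be restarted to pick up the new certificates. On kubeadm clusters they run as static Pods, not systemd services, so restart them by temporarily moving their manifests out of /etc/kubernetes/manifests and back. Only where kube-apiserver runs as a systemd service:

# systemctl restart kube-apiserver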

8.Verify RBAC Configuration.

# kubectl auth can-i get pods --as <user>

If denied, check and fix RBAC policies:

# kubectl get clusterrolebindings

# kubectl get roles -n <namespace>

# kubectl get rolebindings -n <namespace>

9.Check for High CPU or Memory Usage.

# kubectl top nodes

# kubectl top pods -n kube-system

10.Viewing Cluster Events

# kubectl get events --all-namespaces

# kubectl get events

# kubectl get events --sort-by=.metadata.creationTimestamp

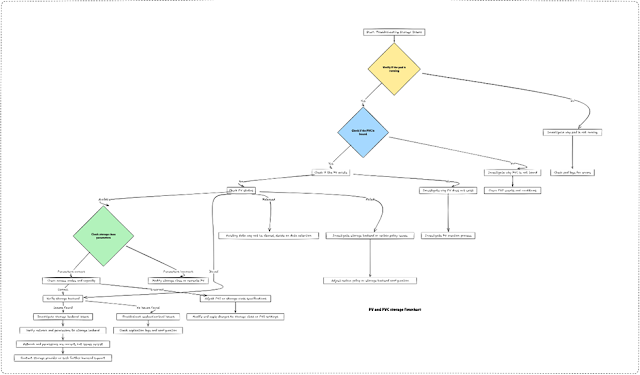

vi). Troubleshooting Storage Issues

1.PVC Stuck in Pending State

# kubectl get pvc -n <namespace>

# kubectl describe pvc <pvc-name> -n <namespace>

# kubectl get storageclass

# kubectl describe storageclass <storageclass-name>

# kubectl get pv
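When several claims are involved, the shell can pick out the ones that are not Bound. This is a sketch only: unbound_pvcs is a hypothetical helper, and it assumes the kubectl get pvc -A --no-headers layout in which STATUS is the third column.

```shell
# unbound_pvcs: read `kubectl get pvc -A --no-headers` on stdin and print
# claims whose STATUS is not "Bound" (e.g. Pending or Lost).
# Columns assumed: NAMESPACE NAME STATUS VOLUME CAPACITY ACCESS-MODES STORAGECLASS AGE
unbound_pvcs() {
  awk '$3 != "Bound" { print $1 "/" $2 " " $3 }'
}
```

Usage:

# kubectl get pvc -A --no-headers | unbound_pvcs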

2.Pod Failing to Mount Volumes

# kubectl get pvc -n <namespace>

# kubectl describe pvc <pvc-name> -n <namespace>

# kubectl get pod <pod-name> -n <namespace> -o yaml

# kubectl describe pod <pod-name> -n <namespace>

3.Data Not Accessible or Lost

# kubectl describe pod <pod-name> -n <namespace>

4.Storage Class Not Working

# kubectl get storageclass

# kubectl describe storageclass <storageclass-name>

# kubectl get pods -n kube-system | grep csi

# kubectl delete pod <csi-plugin-pod> -n kube-system

5.Volume Mount Permissions Issues

# kubectl exec -it <pod-name> -n <namespace> -- ls -l /path/to/mount

# kubectl get pod <pod-name> -n <namespace> -o yaml

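6.Storage Provider-Specific Issues

# kubectl describe pvc <pvc-name> -n <namespace>

(The Events section usually shows the error message returned by the storage provisioner.)

List All Resources

# kubectl get all -A   # -A is short for --all-namespaces

kubectl get all covers only common workload types and Services. It does not include ConfigMaps, Secrets, PVCs, PVs or Ingresses, so query those explicitly.

Check Specific Resources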

| Resource | Command |
|---|---|
| Pods | kubectl get pods -A |
| Services | kubectl get svc -A |
| Deployments | kubectl get deployments -A |
| StatefulSets | kubectl get statefulsets -A |
| ReplicaSets | kubectl get replicasets -A |
| DaemonSets | kubectl get daemonsets -A |
| Jobs | kubectl get jobs -A |
| CronJobs | kubectl get cronjobs -A |
| Persistent Volumes (PV) | kubectl get pv |
| Persistent Volume Claims (PVC) | kubectl get pvc -A |
| ConfigMaps | kubectl get configmaps -A |
| Secrets | kubectl get secrets -A |
| Ingress | kubectl get ingress -A |
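The commands in the table can be run in one go, for example to capture a snapshot of the cluster before making changes. In this sketch snapshot_resources is a hypothetical helper. The KUBECTL variable is an added convenience: setting KUBECTL=echo previews the commands without contacting the cluster. Note that the output includes Secret names (but not their values).

```shell
# snapshot_resources: run "get" for each resource type listed in the table,
# printing a header before each section. KUBECTL may be overridden
# (e.g. KUBECTL=echo) to preview the commands instead of running them.
snapshot_resources() {
  k=${KUBECTL:-kubectl}
  for r in pods svc deployments statefulsets replicasets daemonsets \
           jobs cronjobs pvc configmaps secrets ingress; do
    echo "=== $r ==="
    $k get "$r" -A
  done
  echo "=== pv ==="
  $k get pv                      # PersistentVolumes are cluster-scoped
}
```

Usage:

# snapshot_resources > cluster-snapshot.txt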
