| Term | Description |
| --- | --- |
| Labels | Labels are key-value pairs attached to Kubernetes objects (such as pods, services, and deployments) as metadata. |
| Pods selector | A selector matches objects by their labels. Labels and selectors are required to connect deployments, pods, and services. |
| Node selector | A node selector is a map of key/value pairs specified in the pod that must match custom labels defined on nodes. |
| Node Affinity | Node affinity is a more advanced feature that provides fine-grained control over pod placement. |
| Pod Affinity | Pod affinity allows co-location by scheduling Pods onto nodes that already have specific Pods running. |
| Anti Affinity | Anti-affinity keeps matching Pods apart. For example, a redis-cache pod is deployed only on a node that does not already have a redis-cache pod; nodes that already have one are ignored. |
| Taints | Taints are applied to nodes and repel pods that do not tolerate them. |
| Tolerations | Tolerations are applied to pods and allow the scheduler to place them on nodes with matching taints. |

### NodeSelector ###

nodeSelector is a simple Pod scheduling feature that allows scheduling a Pod onto a node whose labels match the nodeSelector labels specified by the user.
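The matching rule can be sketched in a few lines of Python. This is only an illustrative model, not scheduler code: the function name `matches_node_selector` and the sample node labels are assumptions made for the example. It shows that a node qualifies only when it carries every key/value pair listed in the Pod's nodeSelector.

```python
def matches_node_selector(node_labels: dict, node_selector: dict) -> bool:
    """Return True when every nodeSelector pair is present in the node's labels."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Hypothetical cluster state: only worker02 carries the disktype=ssd label.
nodes = {
    "worker01": {"kubernetes.io/hostname": "worker01"},
    "worker02": {"kubernetes.io/hostname": "worker02", "disktype": "ssd"},
}
selector = {"disktype": "ssd"}

eligible = [name for name, labels in nodes.items() if matches_node_selector(labels, selector)]
print(eligible)  # ['worker02']
```

If no node matches, the list is empty, which corresponds to a Pod that stays Pending.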

There are some scenarios in which you want your Pods to end up on specific nodes:

1) You want your Pod(s) to end up on a machine with an SSD attached to it.

2) You want to co-locate Pods on particular machine(s) in the same availability zone.

3) You want to co-locate a Pod from one Service with a Pod from another Service on the same node because these Services strongly depend on each other. For example, you may want to place a web server on the same node as an in-memory cache store like Memcached (see the example below).

To work with nodeSelector, we first need to attach a label to the node with the command below:

# kubectl label nodes <node-name> <label-key>=<label-value>

How to add labels to nodes:

# kubectl label nodes worker02 disktype=ssd

How to verify the attached labels:

# kubectl get nodes worker02 --show-labels | grep "disktype=ssd"

# kubectl get nodes -l disktype=ssd

How to remove labels from nodes (only when the label is no longer needed, because Pods that select on it will no longer find a matching node):

# kubectl label nodes worker02 disktype-

# vim nginx_pods.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mypods
spec:
  containers:
  - name: nginx-container
    image: nginx
  nodeSelector:
    disktype: ssd

# kubectl apply -f nginx_pods.yaml

# kubectl describe pods mypods | grep "Node-Selectors"

### Node Affinity (for node) ###

Node affinity is an enhanced version of nodeSelector. It was introduced as alpha in Kubernetes 1.2 and promoted to beta in Kubernetes 1.6. It offers a more expressive syntax for fine-grained control of how Pods are scheduled to specific nodes.

1) Node affinity is more flexible than nodeSelector.

2) Node affinity supports two types of rules:

i) requiredDuringSchedulingIgnoredDuringExecution: Specifies a hard requirement for pod scheduling. Pods with this affinity rule must be scheduled onto nodes that match the defined criteria. If no suitable node is available, the pod is not scheduled.

ii) preferredDuringSchedulingIgnoredDuringExecution: Specifies a soft preference. The scheduler attempts to place pods onto nodes that match the specified criteria, but this is not a strict requirement. If no suitable node is available, the scheduler can still place the pod on another node.

In both rule names, IgnoredDuringExecution means that a pod already running on a node keeps running there even if the node's labels change later.

Besides custom labels, affinity rules can also match the default node labels, such as kubernetes.io/hostname.

The manifests in this section use the In operator, but node affinity supports the following operators:

- In

- NotIn

- Exists

- DoesNotExist

- Gt

- Lt
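To make the operator semantics concrete, here is a small Python model of how a single match expression could be evaluated against a node's labels. It is a sketch under stated assumptions, not the scheduler's implementation: the function name `match_expression` and the sample labels are hypothetical. It assumes that NotIn also matches when the key is absent and that Gt and Lt compare a single integer value.

```python
def match_expression(labels: dict, key: str, operator: str, values=()) -> bool:
    """Evaluate one matchExpressions entry against a node's labels."""
    present = key in labels
    if operator == "In":
        return present and labels[key] in values
    if operator == "NotIn":
        # A node without the key is also considered a match.
        return not present or labels[key] not in values
    if operator == "Exists":
        return present
    if operator == "DoesNotExist":
        return not present
    if operator in ("Gt", "Lt"):
        if not present:
            return False
        try:
            actual, bound = int(labels[key]), int(values[0])
        except (ValueError, IndexError):
            return False
        return actual > bound if operator == "Gt" else actual < bound
    raise ValueError(f"unknown operator: {operator}")


node_labels = {"disk": "ssd2", "cpu-count": "8"}  # hypothetical labels
print(match_expression(node_labels, "disk", "In", ["ssd2"]))   # True
print(match_expression(node_labels, "cpu-count", "Gt", ["4"]))  # True
print(match_expression(node_labels, "gpu", "DoesNotExist"))     # True
```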

Weight: The weight field defines the priority of each preferred condition. It can be any value from 1 to 100. For every node, the scheduler adds up the weights of the preferred conditions that the node satisfies, and it favors the node with the highest total.
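The weight calculation can be illustrated with a short Python sketch. The node names, labels, and the two preferences below are invented for the example, and only the In operator is modeled. The real scheduler also combines this total with other scoring criteria, so treat the result as an approximation of the idea rather than the final ranking.

```python
def preference_score(node_labels: dict, preferences: list) -> int:
    """Sum the weights of all preferred terms that the node satisfies (In operator only)."""
    total = 0
    for pref in preferences:
        if node_labels.get(pref["key"]) in pref["values"]:
            total += pref["weight"]
    return total


# Hypothetical preferences: memory matters more than disk type.
preferences = [
    {"weight": 80, "key": "memory", "values": ["high", "medium"]},
    {"weight": 20, "key": "disktype", "values": ["ssd"]},
]
nodes = {
    "node-a": {"memory": "high", "disktype": "ssd"},
    "node-b": {"disktype": "ssd"},
    "node-c": {},
}

scores = {name: preference_score(labels, preferences) for name, labels in nodes.items()}
print(scores)                          # {'node-a': 100, 'node-b': 20, 'node-c': 0}
print(max(scores, key=scores.get))     # node-a
```

Note that node-c still receives a score of 0 rather than being excluded, which is what distinguishes a preference from a hard requirement.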

1. First use case (hard requirement): the scheduler matches node labels against the pod's rule and schedules the pod only on a matching node.

This configuration ensures that the Pod is scheduled only on nodes that have the label disk with the value ssd2. If no node has this label, the Pod remains in the Pending state.

# vim nodeAffinity-required.yaml

apiVersion: v1
kind: Pod
metadata:
  name: kplabs-node-affinity
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disk
            operator: In
            values:
            - ssd2
  containers:
  - name: with-node-affinity
    image: nginx

2. Second use case (soft preference): the pod is scheduled on any available node if no node carries the label or the label value does not match. The pod does not go to the Pending state.

This configuration prefers to schedule the pod on nodes whose memory label has the value high or medium. If no node has this label, or if all preferred nodes are unavailable, Kubernetes can still schedule the pod on other nodes.

apiVersion: v1
kind: Pod
metadata:
  name: node-affinity-preferred
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: memory
            operator: In
            values:
            - high
            - medium
  containers:
  - name: affinity-prefferd
    image: nginx

# kubectl get pods -o wide

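The values listed under the In operator act as an OR condition: a node matches if its memory label is either high or medium.

### Pods Affinity ###

This feature addresses the third scenario above. Inter-Pod affinity allows co-location by scheduling Pods onto nodes that already have specific Pods running.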

1. Use case: all redis pods must be deployed in the same zone (us-east-1a), which contains a single node.

# kubectl label nodes worker01 kubernetes.io/zone=us-east-1a

# kubectl label nodes worker02 kubernetes.io/zone=us-east-2a

apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-cache
spec:
  selector:
    matchLabels:
      security: S1
  replicas: 2
  template:
    metadata:
      labels:
        security: S1
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: security
                operator: In
                values:
                - S1
            topologyKey: "kubernetes.io/zone"
      containers:
      - name: redis-server
        image: redis:3.2-alpine

affinity: The section where you define pod affinity rules.

podAffinity: Specifies that you are defining rules related to other pods.

requiredDuringSchedulingIgnoredDuringExecution: Indicates that the rule must be met for pod scheduling to occur. It is not re-evaluated during execution, so a running pod stays in place if the rule is no longer met.

labelSelector: Defines the label selector that other pods must satisfy for this pod to be scheduled close to them.

matchExpressions: Allows you to specify label matching expressions.

key: security: Specifies the label key that must be matched.

operator: In: Specifies the operator used to match the label. In this case, it matches if the label value is one of the specified values.

values: S1: Specifies the value(s) that the label must have to satisfy the match expression.

topologyKey: "kubernetes.io/zone": Specifies the topology domain in which the rule operates. In this case, the rule ensures that pods are scheduled in the same availability zone as the matching pods.
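The role of topologyKey can be modeled with a short Python sketch. It is a simplified illustration, not scheduler code: the function name `affinity_candidates`, the extra node worker03, and the running pod are assumptions for the example. The idea is that a node is a candidate when its value for the topology key equals the value of a node that already runs a matching pod.

```python
def affinity_candidates(nodes: dict, running_pods: list, match_labels: dict, topology_key: str) -> list:
    """Return nodes whose topology domain already hosts a pod matching match_labels."""
    domains = set()
    for pod in running_pods:
        if all(pod["labels"].get(k) == v for k, v in match_labels.items()):
            domains.add(nodes[pod["node"]].get(topology_key))
    domains.discard(None)  # nodes without the topology label define no domain
    return sorted(name for name, labels in nodes.items() if labels.get(topology_key) in domains)


# worker03 is a hypothetical third node in the same zone as worker01.
nodes = {
    "worker01": {"kubernetes.io/zone": "us-east-1a"},
    "worker02": {"kubernetes.io/zone": "us-east-2a"},
    "worker03": {"kubernetes.io/zone": "us-east-1a"},
}
running_pods = [{"node": "worker01", "labels": {"security": "S1"}}]

print(affinity_candidates(nodes, running_pods, {"security": "S1"}, "kubernetes.io/zone"))
# ['worker01', 'worker03']
```

With a zone-level topology key the new pod may land on any node in the zone, not necessarily on the same node as the matching pod. Using kubernetes.io/hostname as the key would narrow the domain to a single node.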

# kubectl get pods -o wide

# kubectl get pods --show-labels

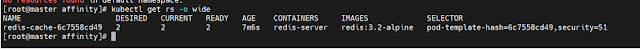

# kubectl get deployments.apps -o wide

# kubectl get rs -o wide

### Pods Anti Affinity ###

With pod anti-affinity, a redis-cache pod is deployed on a node only if that node does not already have a redis-cache pod. Nodes that already have a redis-cache pod are ignored.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-cache
spec:
  selector:
    matchLabels:
      app: store
  replicas: 2
  template:
    metadata:
      labels:
        app: store
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - store
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: redis-server
        image: redis:3.2-alpine

# kubectl get pods -o wide

Suppose a pod with the label app=store is already running on node-1. If you run another pod with the same label app=store, it will be deployed on node-2.
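The effect of a required anti-affinity rule with the hostname topology key can be simulated in Python. This is a deliberately simplified sketch: the function name `place_with_anti_affinity` is hypothetical, and nodes are picked in list order, which is an assumption and not how the real scheduler chooses among eligible nodes. It shows that each node receives at most one replica and that surplus replicas stay unscheduled.

```python
def place_with_anti_affinity(node_names: list, replicas: int) -> dict:
    """Assign at most one replica per node; replicas without a free node stay unplaced (None)."""
    free_nodes = list(node_names)
    placements = {}
    for index in range(replicas):
        pod_name = f"redis-cache-{index}"
        # A node is eligible only while it hosts no other replica.
        placements[pod_name] = free_nodes.pop(0) if free_nodes else None
    return placements


print(place_with_anti_affinity(["node-1", "node-2"], 2))
# {'redis-cache-0': 'node-1', 'redis-cache-1': 'node-2'}
print(place_with_anti_affinity(["node-1", "node-2"], 3))
# {'redis-cache-0': 'node-1', 'redis-cache-1': 'node-2', 'redis-cache-2': None}
```

The `None` entry in the second call corresponds to a pod that remains Pending because every node already runs a pod with the same label.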

Practical example: redis-cache is deployed with one pod on each node, and wherever a redis-cache pod is running, exactly one Nginx pod must run as well.

# web-server.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
spec:
  selector:
    matchLabels:
      app: web-store
  replicas: 2
  template:
    metadata:
      labels:
        app: web-store
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - web-store
            topologyKey: "kubernetes.io/hostname"
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - store
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: web-app
        image: nginx:1.16-alpine

# kubectl apply -f web-server.yaml

### Taints and Tolerations ###

1. Taints and tolerations are mechanisms used to control pod placement and scheduling on nodes within a cluster.

2. When one or more taints are applied to a node, the node does not accept any pods that do not tolerate those taints.

3. Taints are applied to nodes, and tolerations are applied to pods.

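i) Toleration operators

| Operator | Description |
| --- | --- |
| Equal | Matches a taint whose key and value both equal those of the toleration. This is the default operator. |
| Exists | Matches a taint with the given key regardless of its value. The toleration does not specify a value. |

ExistsWithValue is not a valid toleration operator. A toleration that must match a key together with a specific value uses Equal.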
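How a toleration is compared with a taint can be sketched in Python. This is an illustrative model and not Kubernetes source code: the function name `tolerates` and the dictionary layout are assumptions. It covers the Equal and Exists operators, and it treats an empty effect or an empty key in the toleration as a wildcard.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True when the toleration matches the given taint."""
    # An empty effect in the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    # An empty key (used with Exists) matches every taint key.
    if toleration.get("key") and toleration["key"] != taint["key"]:
        return False
    operator = toleration.get("operator", "Equal")  # Equal is the default
    if operator == "Exists":
        return True
    if operator == "Equal":
        return toleration.get("value", "") == taint.get("value", "")
    return False


taint = {"key": "env", "value": "prod", "effect": "NoSchedule"}

print(tolerates({"key": "env", "operator": "Equal", "value": "prod", "effect": "NoSchedule"}, taint))  # True
print(tolerates({"key": "env", "operator": "Exists"}, taint))                                          # True
print(tolerates({"key": "env", "operator": "Equal", "value": "dev", "effect": "NoSchedule"}, taint))   # False
```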

1. Apply a taint to the node:

# kubectl taint nodes worker02 env=prod:NoSchedule

# kubectl describe node worker02 | grep "Taints"

The kubectl taint command applies a taint with the key env and value prod to the node worker02. The NoSchedule effect ensures that pods without a matching toleration will not be scheduled on this node.

2. Create a Pod with a toleration:

# vim firstpod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: my-nginx-pod
spec:
  containers:
  - name: nginx-container
    image: nginx
  tolerations:
  - key: "env"
    operator: "Equal"
    value: "prod"
    effect: "NoSchedule"

# kubectl apply -f firstpod.yaml

# kubectl get pods -o wide

# kubectl describe pods my-nginx-pod | grep "Tolerations"

If a pod does not declare a matching toleration, it cannot run on worker02 and is deployed on the worker01 node instead.

# kubectl run redis-pods --image=redis --restart=Never

# kubectl get pods -o wide

# kubectl describe pods redis-pods | grep "Tolerations"

To remove a taint from a node, append a minus sign (-) to the taint:

# kubectl taint nodes worker02 env=prod:NoSchedule-

Taint worker nodes to run only specific workloads (e.g., GPU workloads).

# kubectl taint nodes gpu-node hardware=gpu:NoSchedule

NoExecute

Use the NoExecute effect to evict non-critical pods: pods that are already running on the node and do not tolerate the taint are evicted, and new pods without a matching toleration are not scheduled there.

PreferNoSchedule

- Kubernetes will try to avoid scheduling new pods on a tainted node.

- Unlike NoSchedule, it is not a hard restriction: if no other suitable nodes are available, Kubernetes may still schedule pods on the tainted node.

- Existing pods on the node are not affected, and they will not be evicted (unlike NoExecute).

apiVersion: v1
kind: Pod
metadata:
  name: prefer-noschedule-pod
spec:
  tolerations:
  - key: "low-priority"
    operator: "Equal"
    value: "true"
    effect: "PreferNoSchedule"
  containers:
  - name: nginx
    image: nginx

This pod tolerates the taint low-priority=true:PreferNoSchedule, so the tainted node is not penalized when the scheduler evaluates nodes for it. Pods without this toleration can still land on the node, but Kubernetes will prefer other nodes if available.
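The difference between the three taint effects can be summarized with a small Python sketch. It is a hypothetical helper, not part of any Kubernetes API: `node_verdict` takes the effects of the taints that a pod does not tolerate and returns how the node is treated for a new pod. Eviction of running pods under NoExecute is outside the scope of this model.

```python
def node_verdict(untolerated_effects: list) -> str:
    """Classify a node for a new pod, given the effects of the taints it does not tolerate."""
    if "NoSchedule" in untolerated_effects or "NoExecute" in untolerated_effects:
        return "filtered out"            # hard restriction
    if "PreferNoSchedule" in untolerated_effects:
        return "allowed, scored lower"   # soft restriction
    return "allowed"


print(node_verdict(["NoSchedule"]))        # filtered out
print(node_verdict(["PreferNoSchedule"]))  # allowed, scored lower
print(node_verdict([]))                    # allowed
```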
