A). How Static Persistent Volume (PV) and Persistent Volume Claim (PVC) work with HostPath in Kubernetes.

- A hostPath volume mounts a file or directory from the host node’s filesystem into your pod.

- A hostPath PersistentVolume is intended for development and testing on a single-node cluster. On a multi-node cluster the data stays on whichever node the pod ran on, so a pod scheduled to a different node does not see it.

i) First create a PV for hostpath.

# hostpath-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-hostpath
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/shashi_data"

# kubectl apply -f hostpath-pv.yaml

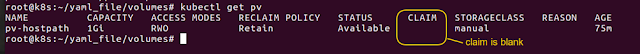

# kubectl get pv

ii) Create a PVC for hostpath.

# cat hostpath-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-hostpath
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi

# kubectl apply -f hostpath-pvc.yaml

# kubectl get pvc

iii) Create a pod with hostpath.

# cat busy_box.yaml

apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  volumes:
    - name: host-volume
      persistentVolumeClaim:
        claimName: pvc-hostpath
  containers:
    - image: busybox
      name: busybox
      command: ["/bin/sh"]
      args: ["-c", "sleep 600"]
      volumeMounts:
        - name: host-volume
          mountPath: /mydata

# kubectl apply -f busy_box.yaml

# kubectl get pods -o wide

# kubectl exec busybox -- ls /mydata

# kubectl exec busybox -- touch /mydata/test123

Go to the worker2 node (the node the pod runs on, as reported by kubectl get pods -o wide).

# ls /shashi_data/

B). How to schedule a HostPath pod on selected nodes?

# kubectl apply -f hostpath-pv.yaml

# kubectl apply -f hostpath-pvc.yaml

Check node labels.

# kubectl get node worker1 --show-labels

Add a label to the worker1 node.

# kubectl label node worker1 node_worker=true

# kubectl get node worker1 --show-labels

# vim busy_box.yaml
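
The edited manifest is not shown here. A minimal sketch of what it could look like, assuming the pod is pinned to worker1 with a nodeSelector that matches the node_worker=true label added earlier. The nodeSelector block is the assumed addition; everything else is the busybox pod from section A.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  # Assumption: schedule only on nodes labelled node_worker=true (worker1 here).
  nodeSelector:
    node_worker: "true"   # label values are strings, so quote "true"
  volumes:
    - name: host-volume
      persistentVolumeClaim:
        claimName: pvc-hostpath
  containers:
    - image: busybox
      name: busybox
      command: ["/bin/sh"]
      args: ["-c", "sleep 600"]
      volumeMounts:
        - name: host-volume
          mountPath: /mydata
```

After saving, kubectl apply -f busy_box.yaml should place the pod on worker1, which kubectl get pods -o wide can confirm.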

# kubectl get pods -o wide

# kubectl exec busybox -- touch /mydata/test71

# kubectl exec busybox -- ls /mydata

test71

# ls /shashi_data/

C). How to reuse a PersistentVolume (PV) in Kubernetes.

# kubectl apply -f hostpath-pvc.yaml

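How to fix the “manual not found” error

After the previous PVC is deleted, a PV with the Retain reclaim policy stays in the Released state and still references the old claim, so the new PVC remains Pending. Edit the PV to remove the claimRef field, which ties the PV to the previous PVC: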

# kubectl edit pv pv-hostpath
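
For orientation, the part of the PV that kubectl edit opens looks roughly like the fragment below. This is a sketch only: the namespace and the placeholder values are assumptions, and the real object shows its own uid and resourceVersion. Deleting the whole claimRef block and saving should move the PV from Released back to Available.

```yaml
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: manual
  hostPath:
    path: "/shashi_data"
  # Delete everything from claimRef down to uid, then save.
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: pvc-hostpath
    namespace: default                     # assumption: the claim lived in default
    resourceVersion: "<resourceVersion>"   # placeholder
    uid: <uid-of-the-deleted-claim>        # placeholder
```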

# kubectl get pv

# kubectl apply -f hostpath-pvc.yaml

# kubectl get pv

# kubectl get pvc

# kubectl describe pvc pvc-hostpath

D). Create Static Persistent Volume PV and PVC for EBS AWS.

1. Manually create the EBS volume in the same Availability Zone (us-east-2b) as the cluster node.

2. Attach the volume vol-0cf28e25fd0db918e to the node, format it with an ext4 filesystem, then detach it from the node.

Disk → PV → PVC ← Pods

i) Create a PV for EBS.

# vim ebs-pv.yaml
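
The contents of ebs-pv.yaml are not shown here. A minimal sketch of what it could contain, assuming the in-tree awsElasticBlockStore volume source (consistent with the kubernetes.io/aws-ebs provisioner used in section E). The name, size and storage class mirror the PVC in the next step, and the volume ID and filesystem come from the preparation steps. The Retain policy is an assumption. Newer clusters that use the EBS CSI driver would need a csi volume source instead.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-ebs-static              # must match volumeName in the PVC
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: gp2            # must match storageClassName in the PVC
  persistentVolumeReclaimPolicy: Retain   # assumption: keep the disk when the claim goes away
  awsElasticBlockStore:
    volumeID: vol-0cf28e25fd0db918e       # the manually created EBS volume
    fsType: ext4
```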

# kubectl apply -f ebs-pv.yaml

# kubectl get pv

ii) Create a PVC for EBS.

# vim ebs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  labels:
    app: mysql
  name: pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: gp2
  volumeMode: Filesystem
  volumeName: pv-ebs-static

# kubectl apply -f ebs-pvc.yaml

# kubectl get pvc

iii) Create a Pod for EBS.

# vim nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - name: http
          containerPort: 80
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: pvc

# kubectl apply -f nginx.yaml

# kubectl get pv,pvc,pods

# kubectl exec -it nginx -- /bin/bash

# touch /usr/share/nginx/html/test.txt

E). Create Dynamic PVC and StorageClass for EBS AWS.

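Note: a StorageClass accepts only "Delete" or "Retain" as its reclaimPolicy. Setting "Recycle" is rejected with this error:

reclaimPolicy: Unsupported value: "Recycle": supported values: "Delete", "Retain"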

Disk ← storageClass ← PVC ← Pods

i) Create a storageClass.

# vim storageclass.yaml

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: mystorageclass
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
  iopsPerGB: "10"   # applies only to io1 volumes
  fsType: ext4
reclaimPolicy: Delete
mountOptions:
  - debug

# kubectl apply -f storageclass.yaml

# kubectl get sc

# vim ebs-pvc_test.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  labels:
    app: mysql
  name: pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: mystorageclass

# kubectl apply -f ebs-pvc_test.yaml

# kubectl get pvc

# vim nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - name: http
          containerPort: 80
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: pvc

# kubectl apply -f nginx.yaml

# kubectl get pods,pv,pvc

# kubectl describe pods nginx

F). How to Allow Multiple Pods to Read/Write in AWS EFS Storage in Kubernetes.

1. In the EFS security group, allow inbound NFS traffic (TCP port 2049) from the worker node IPs.

2. Click the Attach option; it shows the access point URL.

3. To change the security group in EFS:

Network → Manage → change security group.

4. Install nfs-common on all worker nodes: sudo apt install nfs-common

https://github.com/antonputra/tutorials/tree/main/lessons/041

# mkdir efs

# cd efs

1-namespace.yaml

2-service-account.yaml

3-clusterrole.yaml

4-clusterrolebinding.yaml

5-role.yaml

6-rolebinding.yaml

7-deployment.yaml

8-storage-class.yaml

# mkdir test

# cd test

1-namespace.yaml

2-persistent-volume-claim.yaml

3-foo-deployment.yaml

4-bar-deployment.yaml
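
The manifests themselves live in the linked repository and are not reproduced here. As a rough illustration of the idea only, a shared claim could look like the sketch below. The names efs-claim and efs-sc and the requested size are hypothetical and not taken from the repository. The key point is the ReadWriteMany access mode, which lets the foo and bar deployments mount the same volume at the same time.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-claim            # hypothetical name
spec:
  accessModes:
    - ReadWriteMany          # many pods on many nodes may read and write
  storageClassName: efs-sc   # hypothetical; use the class defined in 8-storage-class.yaml
  resources:
    requests:
      storage: 1Gi           # assumed size; the field is required in a PVC
```

Both deployments would then reference the claim by name under volumes.persistentVolumeClaim.claimName.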

# testing

Run the command below on worker1 and worker2; the EFS data is available at the mounted path shown on each worker node.

# mount | grep "nfs"

1. Set up an NFS server (if not already available)

2. Deploy the NFS CSI Driver

3. Create an NFS StorageClass (for dynamic provisioning)

4. Create a PersistentVolumeClaim (PVC)

5. Deploy a Pod using the dynamically provisioned volume

1. Set up an NFS server (if not already available)

# showmount -e <NFS_SERVER_IP>

2. Install the NFS CSI Driver in Kubernetes.

Kubernetes CSI (Container Storage Interface) driver for NFS.

Provisioner Name: nfs.csi.k8s.io

# helm repo add csi-driver-nfs https://raw.githubusercontent.com/kubernetes-csi/csi-driver-nfs/master/charts

# helm repo update

# helm repo list

# helm install nfs-csi csi-driver-nfs/csi-driver-nfs --namespace kube-system --create-namespace

# helm list -n kube-system

Note: To remove the repo from Helm:

# helm repo remove csi-driver-nfs

Note: To remove the NFS CSI driver:

# helm uninstall nfs-csi -n kube-system

Pods are created for the NFS CSI driver.

# kubectl get pods -n kube-system | grep nfs

The CSI driver is listed.

# kubectl get csidrivers

Check which nodes have the CSI driver installed.

# kubectl get csinodes

3. Create an NFS StorageClass.

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi
provisioner: nfs.csi.k8s.io
parameters:
  server: <NFS_SERVER_IP>      # Replace with actual NFS server IP
  share: /exported/nfs-data    # Path to NFS shared directory
reclaimPolicy: Retain
volumeBindingMode: Immediate
allowVolumeExpansion: true

# kubectl apply -f storageclass-nfs.yaml

# kubectl get storageclass

Or

# kubectl get sc

4. Create a PersistentVolumeClaim (PVC).

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  storageClassName: nfs-csi

# kubectl apply -f pvc.yaml

# kubectl get pvc

# kubectl get pv

5. Deploy a Pod Using the Dynamically Provisioned Volume.

apiVersion: v1
kind: Pod
metadata:
  name: nfs-test-pod
spec:
  containers:
    - name: busybox
      image: busybox
      command: ["sleep", "3600"]
      volumeMounts:
        - mountPath: "/mnt/nfs"
          name: nfs-volume
  volumes:
    - name: nfs-volume
      persistentVolumeClaim:
        claimName: nfs-pvc

# kubectl apply -f pod.yaml

# kubectl get pods

# kubectl get pv,pvc

Verification:

1. Check Mounted Storage Inside the Pod

# kubectl exec -it nfs-test-pod -- df -h /mnt/nfs

2. Create a Test File to Verify Persistence

# kubectl exec -it nfs-test-pod -- touch /mnt/nfs/testfile.txt

Check the NFS node: each PVC gets its own volume directory under the NFS export path.

# ls /exported/nfs-data/<volume>

# ls /exported/nfs-data/pvc-819ab3b0-18a0-4f33-beb5-747530c01957/

Note:

1. If the pod is deleted and recreated, it uses the same PVC volume.

2. If the PVC is deleted and recreated, a new volume with a new PVC ID is created.

3. With reclaimPolicy: Delete, deleting the PVC also deletes the PV (and all its data).
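
To illustrate note 3, a variant of the NFS StorageClass with reclaimPolicy: Delete might look like the sketch below. The name nfs-csi-delete is hypothetical; the server and share values are the same placeholders used in the StorageClass above.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi-delete         # hypothetical name
provisioner: nfs.csi.k8s.io
parameters:
  server: <NFS_SERVER_IP>      # replace with the actual NFS server IP
  share: /exported/nfs-data    # path to the NFS shared directory
reclaimPolicy: Delete          # deleting the PVC also deletes the PV and its data
volumeBindingMode: Immediate
```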

# kubectl get pv,pvc
