What is Jenkins?

Jenkins is an open-source automation server written in Java, extended through plugins built for continuous integration (CI). Jenkins builds and tests your software projects continuously, making it easier for developers to integrate changes into the project. It automates the parts of software development related to building, testing, and deploying, which facilitates continuous integration and continuous delivery (CD). It is a server-based system that can run on its own built-in web server or inside a servlet container such as Apache Tomcat.

Project types :-

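1. Freestyle Project :- The freestyle build job is a highly flexible and easy-to-use option. You can use it for any type of project. It is easy to set up, and many of its options also appear in other job types.

2. Maven Project :- Builds a Maven project. Jenkins takes advantage of your POM files, which drastically reduces the configuration. (This job type is provided by the Maven Integration plugin.)

3. Pipeline :- Orchestrates long-running activities that can span multiple build agents. Suitable for building pipelines (formerly known as workflows) and/or organizing complex activities that do not easily fit in the freestyle job type. A Pipeline can be written in two syntaxes:

i) Scripted Pipeline.

ii) Declarative Pipeline.

4. Multi-configuration Project :- Suitable for projects that need a large number of different configurations, such as testing on multiple environments or platform-specific builds.

5. Folder :- Creates a container that stores nested items. Unlike a view, which is just a filter, a folder creates a separate namespace.

6. Multibranch Pipeline :- Creates a set of Pipeline projects according to the branches detected in one SCM repository.

7. Organization Folder :- Creates a set of multibranch project subfolders by scanning for repositories.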

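What is Job DSL?

Job DSL is a Jenkins plugin that lets you describe jobs in code, using a Groovy-based domain-specific language (DSL), instead of configuring each job by hand in the web UI. The workflow is:

Job DSL plugin --> create a job (the seed job) --> the seed job creates the other jobs (for example, the demo job).

To set it up, create a Freestyle project to act as the seed job. Add a "Process Job DSLs" build step and enter a script such as: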

job('demo') {
    steps {
        shell('echo Hello World!')
    }
}

What is a seed job in Jenkins?

The seed job is a normal Jenkins job that runs a Job DSL script. The script in turn contains instructions that create additional jobs. In short, the seed job is a job that creates more jobs. For example, when the seed job runs the script above, Jenkins creates (or updates) a freestyle job named demo whose only build step runs echo Hello World!.
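The seed script is ordinary Groovy, so one script can generate many similar jobs. This is a minimal sketch that assumes the Job DSL plugin is installed. The environment names and job names are hypothetical and are used only for illustration.

```groovy
// Hypothetical list of target environments; one job is generated per entry.
def environments = ['dev', 'staging', 'prod']

environments.each { envName ->
    // Creates (or updates) a freestyle job such as "deploy-dev".
    job("deploy-${envName}") {
        description("Deploys the application to ${envName} (generated by the seed job)")
        steps {
            shell("echo Deploying to ${envName}")
        }
    }
}
```

Running the seed job once with this script would create three jobs: deploy-dev, deploy-staging and deploy-prod.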

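What is a Pipeline, and what are its types?

A Pipeline describes the whole build, test, and deploy process as code. The code is typically stored in a file named Jenkinsfile in the project's source repository. A Pipeline orchestrates long-running activities that can span multiple build agents, and it suits complex activities that do not easily fit in the freestyle job type. There are two types of Pipeline syntax:

i) Scripted Pipeline.

ii) Declarative Pipeline.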

1. Scripted Pipeline

Unlike Declarative Pipeline, Scripted Pipeline is written in a general-purpose, Groovy-based syntax. Because of this, Scripted Pipeline gives you a great deal of control over the script. It also lets you change the script's flow extensively, for example with loops, conditionals, and try/catch blocks.

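node('node-1') {
    stage('Source') {
        // Check out the repository (replace with your own URL)
        git "https://github.com/your_repo_name.git"
    }
    stage('Compile') {
        // Resolve the install path of the Gradle tool named 'gradle4'
        def gradle_home = tool 'gradle4'
        sh "'${gradle_home}/bin/gradle' clean compileJava test"
    }
}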
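Here is a minimal Scripted Pipeline sketch of that kind of flow control. It loops over a list and handles failure with try/catch/finally. The module names api and web are hypothetical placeholders, and the echo commands stand in for real test commands.

```groovy
node {
    stage('Test') {
        try {
            // Plain Groovy loop: run one (placeholder) test command per module
            for (module in ['api', 'web']) {
                sh "echo Testing ${module}"
            }
        } catch (err) {
            // Record the failure, then rethrow so the build is marked as failed
            echo "Tests failed: ${err}"
            currentBuild.result = 'FAILURE'
            throw err
        } finally {
            // Runs whether the tests passed or failed
            echo 'Test stage finished'
        }
    }
}
```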

2. Declarative Pipeline

i) Declarative Pipeline is a relatively recent addition to Jenkins Pipeline. It presents a more simplified and opinionated syntax on top of the Pipeline sub-systems.

ii) Declarative Pipeline encourages a declarative programming model, whereas Scripted Pipeline follows a more imperative programming model.

iii) Declarative Pipeline imposes limitations on the user through a stricter, predefined structure, which makes it ideal for simpler continuous delivery pipelines.

iv) Scripted Pipeline has very few limitations. The only constraints on its structure and syntax tend to be those defined by Groovy itself, which makes it ideal for users with more complex requirements.

pipeline {
    agent { label 'node-1' }
    stages {
        stage('Source') {
            steps {
                git "https://github.com/your_repo_name.git"
            }
        }
        stage('Compile') {
            tools {
                gradle 'gradle4'
            }
            steps {
                sh 'gradle clean compileJava test'
            }
        }
    }
}

Sections in a Declarative Pipeline typically contain one or more directives or steps. The agent section specifies where the entire Pipeline, or a specific stage, will execute. It accepts the following parameters:

any :- Execute the Pipeline, or stage, on any available agent.

none :- When applied at the top level of the pipeline block, no global agent is allocated for the entire Pipeline run, and each stage directive must contain its own agent directive.

label :- Execute the Pipeline, or stage, on an agent available in the Jenkins environment with the provided label.

node :- agent { node { label 'labelName' } } behaves the same as agent { label 'labelName' }, but node allows additional options (such as customWorkspace).

docker :- Execute the Pipeline, or stage, with the given container. The container is dynamically provisioned on a node pre-configured to accept Docker-based Pipelines, or on a node matching the optionally defined label parameter. docker also optionally accepts an args parameter, which may contain arguments to pass directly to a docker run invocation.

agent {
    docker {
        image 'maven:3-alpine'
        label 'my-defined-label'
        args '-v /tmp:/tmp'
    }
}

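This executes all the steps defined in the Pipeline (or stage) within a newly created container of the given name and tag (maven:3-alpine). The container runs on an agent with the label my-defined-label, and the host's /tmp directory is mounted at /tmp inside it.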
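Use the node parameter when you need the extra options it allows. This is a minimal sketch that assumes an agent labelled my-defined-label exists. The workspace path /var/jenkins/custom-ws is a hypothetical example.

```groovy
pipeline {
    agent {
        node {
            // Same as agent { label 'my-defined-label' } ...
            label 'my-defined-label'
            // ... plus a fixed workspace directory (hypothetical path)
            customWorkspace '/var/jenkins/custom-ws'
        }
    }
    stages {
        stage('Where am I') {
            steps {
                // Prints the workspace directory the build is using
                sh 'pwd'
            }
        }
    }
}
```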

// Declarative //

pipeline {
    agent none
    stages {
        stage('Example Build') {
            agent { docker 'maven:3-alpine' }
            steps {
                echo 'Hello, Maven'
                sh 'mvn --version'
            }
        }
        stage('Example Test') {
            agent { docker 'openjdk:8-jre' }
            steps {
                echo 'Hello, JDK'
                sh 'java -version'
            }
        }
    }
}

1. Defining agent none at the top level of the Pipeline ensures that an executor will not be assigned unnecessarily. Using agent none requires that each stage directive contain an agent directive.

2. Execute the steps contained within this stage using the given container.

3. Execute the steps contained within this stage using a different image from the previous stage.

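The environment directive specifies key-value pairs that are defined as environment variables. Depending on where the directive is placed, they apply to all steps or only to the steps of one stage. Inside environment, the credentials() helper method can be used to access pre-defined credentials by their identifier.

// Declarative //

pipeline {
    agent any
    environment {
        // Example path; adjust it to your own Jenkins home
        WorkSpacePath = '/home/ec2-user/jenkins-data/jenkins_home/workspace'
        // Copies of built-in variables
        JOBNAME = "${env.JOB_NAME}"
        WORKSPACE = "${env.WORKSPACE}"
    }
    stages {
        stage('Example') {
            environment {
                // Secret text credential stored in Jenkins under this ID
                AN_ACCESS_KEY = credentials('my-predefined-secret-text')
            }
            steps {
                sh 'printenv'
                echo "${env.WorkSpacePath}"
            }
        }
    }
}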
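For a "Username with password" credential, credentials() sets three variables. The variable you name holds username:password, and two more variables with the same name plus the suffixes _USR and _PSW hold the parts separately. This is a minimal sketch that assumes a credential with the hypothetical ID my-registry-login exists in Jenkins.

```groovy
pipeline {
    agent any
    environment {
        // Also defines REGISTRY_CREDS_USR and REGISTRY_CREDS_PSW
        REGISTRY_CREDS = credentials('my-registry-login')
    }
    stages {
        stage('Show user') {
            steps {
                // Single quotes: the shell expands the variable, so the value
                // is never interpolated into a Groovy string
                sh 'echo "Logging in as $REGISTRY_CREDS_USR"'
            }
        }
    }
}
```

Keeping secrets out of Groovy string interpolation (single quotes, letting the shell expand them) avoids leaking them into the build log or command line.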

Available Options

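i) buildDiscarder :- Persist artifacts and console output for a specific number of recent Pipeline runs. For example: options { buildDiscarder(logRotator(numToKeepStr: '1')) }

ii) timeout :- Set a timeout period for the Pipeline run, after which Jenkins aborts the Pipeline. For example: options { timeout(time: 1, unit: 'HOURS') }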

Example

// Declarative //

pipeline {
    agent any
    options {
        timeout(time: 1, unit: 'HOURS')
    }
    stages {
        stage('Example') {
            steps {
                echo 'Hello World'
            }
        }
    }
}

NOTE :- This example specifies a global execution timeout of one hour, after which Jenkins aborts the Pipeline run.
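Several options can be combined. Some of them, such as timeout and retry, can also be set on an individual stage. This is a minimal sketch, and the numbers in it are arbitrary examples.

```groovy
pipeline {
    agent any
    options {
        // Keep only the 10 most recent builds
        buildDiscarder(logRotator(numToKeepStr: '10'))
        // Do not run two builds of this Pipeline at the same time
        disableConcurrentBuilds()
    }
    stages {
        stage('Flaky step') {
            options {
                // Retry this stage up to 3 times if it fails
                retry(3)
                // Abort this stage if it runs longer than 5 minutes
                timeout(time: 5, unit: 'MINUTES')
            }
            steps {
                echo 'Hello World'
            }
        }
    }
}
```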

Available Parameters

i) string :- A parameter of a string type, for example: parameters { string(name: 'DEPLOY_ENV', defaultValue: 'staging', description: '') }

Example

// Declarative //

pipeline {
    agent any
    parameters {
        string(name: 'PERSON', defaultValue: 'Mr Jenkins', description: 'Who should I say hello to?')
    }
    stages {
        stage('Example') {
            steps {
                echo "Hello ${params.PERSON}"
            }
        }
    }
}
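Besides string, Declarative Pipeline parameters include choice and booleanParam (as well as text and password). This is a minimal sketch, and the parameter names TARGET and DRY_RUN are hypothetical.

```groovy
pipeline {
    agent any
    parameters {
        // The first entry in choices is the default value
        choice(name: 'TARGET', choices: ['staging', 'production'], description: 'Where to deploy')
        booleanParam(name: 'DRY_RUN', defaultValue: true, description: 'Only print what would happen')
    }
    stages {
        stage('Deploy') {
            steps {
                script {
                    // params.DRY_RUN is a Boolean, params.TARGET a String
                    if (params.DRY_RUN) {
                        echo "Dry run: would deploy to ${params.TARGET}"
                    } else {
                        echo "Deploying to ${params.TARGET}"
                    }
                }
            }
        }
    }
}
```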

The triggers directive defines the automated ways in which the Pipeline should be re-triggered. For Pipelines that are integrated with a source such as GitHub or Bitbucket, triggers may not be necessary, because webhook-based integration will likely already be present. The triggers currently available are cron, pollSCM, and upstream.

// Declarative //

pipeline {
    agent any
    triggers {
        // Every four hours (at a hashed minute), Monday to Friday
        cron('H */4 * * 1-5')
    }
    stages {
        stage('Example') {
            steps {
                echo 'Hello World'
            }
        }
    }
}
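pollSCM and upstream are declared the same way. pollSCM checks the repository on a schedule and starts a build only when something has changed. upstream starts a build when other jobs finish. This sketch assumes two hypothetical upstream jobs named job1 and job2.

```groovy
pipeline {
    agent any
    triggers {
        // Poll the SCM roughly every 15 minutes (H spreads the load)
        pollSCM('H/15 * * * *')
        // Also build after job1 or job2 finishes successfully
        upstream(upstreamProjects: 'job1,job2', threshold: hudson.model.Result.SUCCESS)
    }
    stages {
        stage('Example') {
            steps {
                echo 'Hello World'
            }
        }
    }
}
```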

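Supported Tools.

The tools directive defines tools to auto-install and put on the PATH. It is ignored if agent none is specified. The supported tool types are: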

i) maven

ii) jdk

iii) gradle

// Declarative //

pipeline {
    agent any
    tools {
        maven 'apache-maven-3.0.1'
    }
    stages {
        stage('Example') {
            steps {
                sh 'mvn --version'
            }
        }
    }
}

Note :- The tool name must be pre-configured in Jenkins under Manage Jenkins → Global Tool Configuration (called Manage Jenkins → Tools in newer Jenkins versions).
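Several tools can be listed together. This is a minimal sketch that assumes a JDK installation named jdk17 and a Maven installation named maven3 have been configured in Jenkins. Both names are hypothetical.

```groovy
pipeline {
    agent any
    tools {
        // Names must match the installations configured in Jenkins
        jdk 'jdk17'
        maven 'maven3'
    }
    stages {
        stage('Versions') {
            steps {
                // Both tools are now on the PATH
                sh 'java -version'
                sh 'mvn -B -version'
            }
        }
    }
}
```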

The script step takes a block of Scripted Pipeline and executes it in the Declarative Pipeline. For most use cases, the script step should be unnecessary in Declarative Pipelines, but it can provide a useful "escape hatch." script blocks of non-trivial size and/or complexity should be moved into Shared Libraries instead.

pipeline {
    agent any
    parameters {
        string(name: 'NAME', description: 'Please tell me your name')
        choice(name: 'GENDER', choices: ['Male', 'Female'], description: 'Choose Gender')
    }
    stages {
        stage('Printing name') {
            steps {
                // Imperative (Scripted) code inside a Declarative Pipeline
                script {
                    def name = params.NAME
                    def gender = params.GENDER
                    if (gender == 'Male') {
                        echo "Mr. ${name}"
                    } else {
                        echo "Mrs. ${name}"
                    }
                }
            }
        }
    }
}
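When a script block grows, its logic can be moved into a Shared Library as a custom step. This is a minimal sketch that assumes a library registered in Jenkins under the hypothetical name my-shared-library. The library contains a file vars/sayHello.groovy, and the block shows both files, one after the other.

```groovy
// --- vars/sayHello.groovy (in the shared library repository) ---
// Defines a custom step called sayHello
def call(String name = 'human') {
    echo "Hello, ${name}."
}

// --- Jenkinsfile (in the project repository) ---
@Library('my-shared-library') _

pipeline {
    agent any
    stages {
        stage('Greet') {
            steps {
                // The custom step is called directly, without a script block
                sayHello 'Jenkins'
            }
        }
    }
}
```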

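The post section defines one or more additional steps that run at the end of the Pipeline run. If post is placed inside a stage, the steps run at the end of that stage instead. The following post-condition blocks are supported within the post section: always, changed, fixed, regression, aborted, failure, success, unstable, unsuccessful, and cleanup.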

// Declarative //

pipeline {
    agent any
    stages {
        stage('Example') {
            steps {
                echo 'Hello World'
            }
        }
    }
    post {
        always {
            echo 'I will always say Hello again!'
        }
    }
}
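Post conditions can be combined so that different steps run depending on the outcome of the build. This is a minimal sketch, and the echo messages stand in for real notifications.

```groovy
pipeline {
    agent any
    stages {
        stage('Example') {
            steps {
                echo 'Hello World'
            }
        }
    }
    post {
        success {
            echo 'Build succeeded'
        }
        failure {
            echo 'Build failed'
        }
        cleanup {
            // Runs after every other post condition; deletes the workspace
            deleteDir()
        }
    }
}
```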

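Stages can also run in parallel or as a matrix. A stage nested inside a parallel or matrix block cannot itself contain another parallel or matrix block. It can, however, use all other functionality of a stage, including agent, tools, when, etc. A matrix defines axes of name/value pairs: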

matrix {
    axes {
        axis {
            name 'PLATFORM'
            values 'linux', 'mac', 'windows'
        }
    }
    // ...
}
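A matrix must live inside a stage, and it needs its own stages section. Jenkins runs those stages once for every combination of axis values. This is a minimal complete sketch using the PLATFORM axis. The echo step is a placeholder for real build commands, and each cell simply runs on any available agent.

```groovy
pipeline {
    agent none
    stages {
        stage('BuildAndTest') {
            matrix {
                // Each matrix cell gets its own agent
                agent any
                axes {
                    axis {
                        name 'PLATFORM'
                        values 'linux', 'mac', 'windows'
                    }
                }
                stages {
                    stage('Build') {
                        steps {
                            // The axis value is available as an environment variable
                            echo "Building for ${PLATFORM}"
                        }
                    }
                }
            }
        }
    }
}
```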

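Environment variables can also be set dynamically, for example from the output of a shell command:

pipeline {
    agent any
    stages {
        stage('Initialization') {
            environment {
                // Capture the command's output and strip the trailing newline
                JOB_TIME = sh(returnStdout: true, script: "date '+%A %W %Y %X'").trim()
            }
            steps {
                sh 'echo $JOB_TIME'
            }
        }
    }
}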

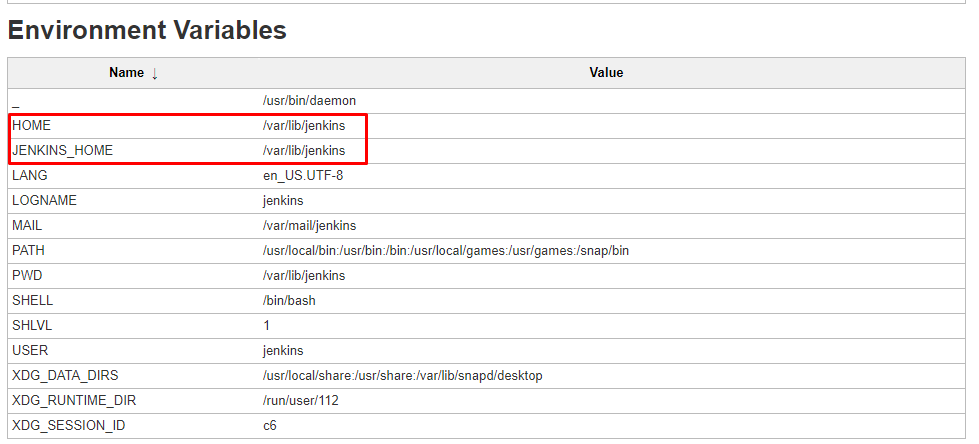

Jenkins: Changing the Jenkins Home Directory.

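sudo service jenkins stop

sudo mkdir /home/new_home

sudo chown jenkins:jenkins /home/new_home/

sudo cp -prv /var/lib/jenkins /home/new_home/

sudo vi /etc/default/jenkins

The cp command places the copy in /home/new_home/jenkins, so in /etc/default/jenkins point JENKINS_HOME at that directory:

# jenkins home location
JENKINS_HOME=/home/new_home/jenkins

sudo service jenkins start

Note :- /etc/default/jenkins applies to Debian/Ubuntu packages that use System V init. Starting with Jenkins 2.335 (2.332.1 LTS), the Linux packages run under systemd. To change JENKINS_HOME there, run sudo systemctl edit jenkins, add Environment="JENKINS_HOME=/home/new_home/jenkins" under a [Service] section, and restart Jenkins.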
